Meta Glasses 2025: Are Smart Glasses Finally Ready for the Mainstream?

By Jalaj Shah

September 26, 2025

Quick Executive Summary

Meta’s 2025 Ray-Ban Display smart glasses, paired with a forearm Neural Band that reads subtle muscle signals, introduce a compact, glanceable color HUD, camera, audio, and AI integration in a consumer-grade Wayfarer frame. For enterprises, that hardware unlocks practical AR workflows: phone-free navigation, remote expert streaming, and direct overlays of digital twin telemetry. This article is a step-by-step enterprise playbook showing how The Intellify turns Meta smart glasses into measurable operational value.

Why enterprises should start pilots with Meta smart glasses now

Meta’s Ray-Ban Display represents the most commercialised attempt yet to pair AR display hardware with an ecosystem of apps and an AI assistant. The package (glasses + Neural Band) starts at $799 and is available in the U.S. from September 30, 2025, a price and launch window that Meta and multiple outlets confirm.

From an enterprise perspective, that matters because the device now combines three things companies need to run hands-free workflows: a reliable POV camera, a private glanceable display for step prompts/alerts, and non-visual input (EMG gestures via the Neural Band) to advance steps without touching screens. These features reduce cognitive friction and unlock practical field-use cases across maintenance, warehousing, inspections, and training.

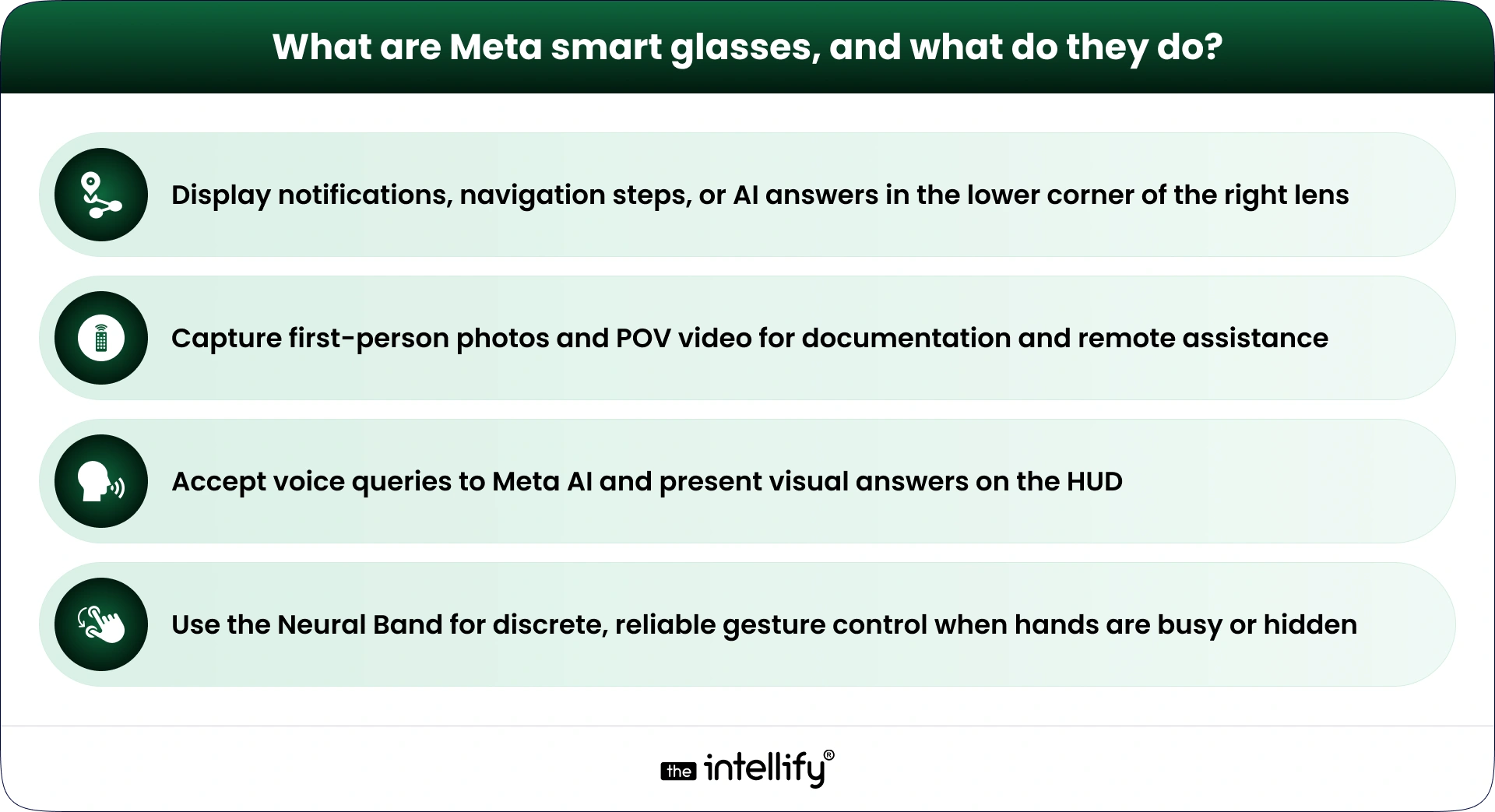

What are Meta smart glasses, and what do they do?

Definition

Meta smart glasses are AR-enabled eyewear that places digital information in a small heads-up display inside the lens while providing capture (camera), audio (open-ear speakers), and control (touch, voice, and EMG gestures). The core promise is “glanceable” information: quick, contextual snippets that keep workers focused on their physical task.

Key on-glass capabilities

- Display notifications, navigation steps, or AI answers in the lower corner of the right lens.

- Capture first-person photos and POV video for documentation and remote assistance.

- Accept voice queries to Meta AI and present visual answers on the HUD.

- Use the Neural Band for discreet, reliable gesture control when hands are busy or hidden.

Industry context: why now? (market reality & forecasts)

Several industry trackers point to a rapid, uneven evolution of XR hardware: a short 2025 softness in some headset shipments is expected to be followed by a strong rebound in 2026 and beyond as newer devices ship and ecosystems mature. IDC notes a multi-year compound annual growth trajectory for AR/VR that presents a large runway for smart-glass adoption, particularly when enterprise pilots produce measurable ROI.

In short, the market drivers (cheaper computing, better displays, improved gesture/voice interfaces, and enterprise demand for remote assistance and training) are lining up now. That’s why organisations that pilot early can help shape the app ecosystem and capture measurable productivity gains before broader consumer price drops.

Core enterprise value propositions for smart glasses

When The Intellify evaluates AR pilots, we focus on three quantifiable benefits:

1) Faster mean time to repair (MTTR) and fewer errors

Hands-free step prompts and live remote expert streams reduce search time and missteps. Typical AR pilot results in the industry show MTTR reductions of 10-40%, depending on complexity and baseline maturity. (We calibrate expectations for each client.)

2) Faster training and better knowledge transfer

First-person recordings and AR-guided SOPs reduce time to competency and preserve tribal knowledge. Enterprises report rapid onboarding improvements when SOPs are converted to bite-sized on-glass sequences.

3) Safety, compliance, and auditability

Automatic time-stamped captures and in-line checklists make audits easier and enhance incident reconstructions. For regulated industries, that alone can justify pilots when risk reduction maps to tangible cost avoidance.

The Intellify pilot blueprint (KPI-driven)

A practical, low-risk pilot converts interest into measurable outcomes. Our proven structure:

Phase 0: value stream selection (Week 0)

Choose a high-impact workflow (e.g., motor repair, electrical inspection, warehouse picking) and set baseline KPIs: MTTR, first-time-fix rate, training time, error rate.

Phase 1: feasibility & design (Weeks 1-2)

Map device model (Meta Ray-Ban Display vs. camera-only Ray-Ban/Oakley), plan connectivity (Wi-Fi/5G/edge), and decide which digital twin telemetry or CMMS fields to surface on-glass.

Phase 2: rapid prototyping (Weeks 3-6)

Deliver a minimum viable AR app: inspection checklist overlay, remote expert stream, and a digital twin overlay showing live telemetry (temperature, vibration, error codes). Author SOPs into glanceable steps that advance via Neural Band gestures or voice.

Phase 3: controlled pilot (Weeks 6-12)

Deploy to 10-30 users, capture KPIs, iterate UX to tune gesture sensitivity, voice fallbacks, and offline behaviour in low-connectivity zones.

Phase 4: measurement & scale plan (Weeks 12-14)

Deliver a KPI report, ROI model (labour hours saved, downtime avoided), security runbook, and a clear rollout roadmap.
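The baseline KPIs set in Phase 0 and reported in Phase 4 can be computed from plain work-order records. The snippet below is a minimal sketch, not our production tooling: the `WorkOrder` fields, the function names, and every sample value are invented for illustration, and a real pilot would pull these records from the client's CMMS.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class WorkOrder:
    # Hypothetical record shape; real CMMS exports will differ.
    repair_hours: float  # time from fault report to restored service
    visits: int          # site visits needed to close the order


def mttr(orders):
    """Mean time to repair, in hours."""
    return mean(o.repair_hours for o in orders)


def first_time_fix_rate(orders):
    """Share of orders closed in a single visit (0.0 to 1.0)."""
    return sum(1 for o in orders if o.visits == 1) / len(orders)


def percent_change(baseline_value, pilot_value):
    """Relative change from baseline; a negative value is a reduction."""
    return (pilot_value - baseline_value) / baseline_value * 100


# Made-up sample data, used only to exercise the functions.
baseline = [WorkOrder(4.0, 1), WorkOrder(6.0, 2), WorkOrder(5.0, 1), WorkOrder(5.0, 2)]
pilot = [WorkOrder(3.0, 1), WorkOrder(4.5, 1), WorkOrder(4.0, 1), WorkOrder(4.5, 2)]

print("MTTR change (%):", percent_change(mttr(baseline), mttr(pilot)))
print("First-time-fix rate:", first_time_fix_rate(baseline), "->", first_time_fix_rate(pilot))
```

Comparing the same functions over the baseline and pilot periods keeps the KPI report consistent, since both numbers come from one definition of each metric.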

Architecture: reliable, secure, resilient

A robust deployment balances latency, security, and offline resilience:

Device layer: Meta Ray-Ban Display + Neural Band, with on-device caching for SOPs and local recording.

Edge/gateway: Local inference nodes for object recognition and low-latency overlays in connectivity-constrained facilities.

Cloud: Digital twin back end, AI/ML services, long-term video archives, and role-based access.

Enterprise systems: Secure integrations to CMMS, ERP, and identity providers for work order synchronisation and audit trails.

Management: Device provisioning, firmware control, and lifecycle planning (repair, spares, prescription options).

This architecture ensures essential functionality continues offline and syncs when connectivity is restored.
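One common way to get this offline-then-sync behaviour is a local queue that buffers events and flushes them in order once the link returns. The sketch below assumes a hypothetical `send` callable that raises `ConnectionError` while offline; the class name and event shape are illustrative and do not correspond to any Meta or CMMS API.

```python
import json
from collections import deque


class OfflineSyncQueue:
    """Buffers events locally and uploads them oldest-first when possible."""

    def __init__(self, send):
        # `send` is a hypothetical uploader: it takes one JSON string and
        # raises ConnectionError when the device has no connectivity.
        self._send = send
        self._pending = deque()

    def record(self, event):
        # Serialise immediately so later changes to `event` cannot alter
        # what was captured at this moment.
        self._pending.append(json.dumps(event))

    def flush(self):
        """Upload pending events in order; stop at the first failure.

        Returns the number of events successfully sent.
        """
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # still offline: keep the event for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self):
        return len(self._pending)
```

An event is removed from the queue only after `send` returns, so a dropped connection mid-flush leaves the unsent steps in place for the next attempt. A real deployment would also persist the queue to storage so that it survives a device restart.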

Security, privacy & compliance (non-negotiable)

Smart glasses raise well-founded privacy concerns, as cameras and always-on microphones can trigger regulatory and social friction. Leading practices The Intellify enforces:

- End-to-end encryption for streams and stored video.

- Visible recording indicators, strict opt-in recording policies, and short-lived session tokens for remote assist.

- Data residency controls and retention policies to meet GDPR, HIPAA, or industry rules.

- Pen testing and secure code review for edge components.

Proactive privacy and governance are essential to win stakeholder trust and regulatory clearance, especially for public-facing or healthcare pilots.
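To make the "short-lived session tokens" practice concrete, here is a minimal sketch of an expiring, HMAC-signed token built only on the Python standard library. The token layout, function names, and the five-minute lifetime are assumptions for illustration; a production remote-assist service would more likely rely on its identity provider or an established token standard.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional


def issue_token(secret: bytes, session_id: str, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Return 'session_id.expiry.signature' valid for ttl_seconds."""
    now = time.time() if now is None else now
    expires = int(now) + ttl_seconds
    payload = f"{session_id}.{expires}".encode()
    sig = hmac.new(secret, payload, hashlib.sha256).digest()
    return f"{session_id}.{expires}." + base64.urlsafe_b64encode(sig).decode()


def verify_token(secret: bytes, token: str, now: Optional[float] = None) -> bool:
    """Accept the token only if the signature matches and it has not expired."""
    now = time.time() if now is None else now
    try:
        session_id, expires_str, sig_b64 = token.rsplit(".", 2)
        expires = int(expires_str)
        sig = base64.urlsafe_b64decode(sig_b64)
    except ValueError:
        return False  # malformed token
    payload = f"{session_id}.{expires}".encode()
    expected = hmac.new(secret, payload, hashlib.sha256).digest()
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sig, expected) and now < expires


# Hypothetical usage: a five-minute token for one remote-assist session.
token = issue_token(b"demo-secret", "assist-42", ttl_seconds=300)
print(verify_token(b"demo-secret", token))
```

Because the expiry is part of the signed payload, a client cannot extend a session by editing the timestamp.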

Cost, lifecycle & ROI considerations

Unit price: the Meta Ray-Ban Display starts at $799 (including the Neural Band), the Oakley Vanguard sport model is $499, and camera-only Ray-Ban Gen-2 trims start lower. Use these numbers when modelling capex.

TCO factors include accessories, charging infrastructure, device management licensing, connectivity upgrades, training, and replacement cadence (3-5 years typical). The Intellify builds conservative and aggressive ROI scenarios mapping labour savings, downtime reduction, and error-cost avoidance to procurement decisions.
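A conservative-versus-aggressive comparison of this kind can be sketched in a few lines. In the example below only the $799 unit price comes from this article; the fleet size, annual overhead, labour rate, hours saved, and the `simple_roi` function itself are placeholder assumptions, not client data or a forecast.

```python
def simple_roi(units, unit_price, annual_overhead_per_unit,
               hours_saved_per_unit_per_year, labour_rate, years):
    """Return total cost, benefit, net value, and ROI percentage."""
    capex = units * unit_price
    # Overhead stands in for licences, accessories, connectivity, and training.
    opex = units * annual_overhead_per_unit * years
    total_cost = capex + opex
    benefit = units * hours_saved_per_unit_per_year * labour_rate * years
    net = benefit - total_cost
    return {
        "total_cost": total_cost,
        "benefit": benefit,
        "net": net,
        "roi_pct": net / total_cost * 100,
    }


# Placeholder inputs: only the 799 unit price is taken from the article.
common = dict(units=20, unit_price=799, annual_overhead_per_unit=200,
              labour_rate=40, years=3)
conservative = simple_roi(hours_saved_per_unit_per_year=10, **common)
aggressive = simple_roi(hours_saved_per_unit_per_year=50, **common)

for name, result in (("conservative", conservative), ("aggressive", aggressive)):
    print(f"{name}: cost={result['total_cost']}, net={result['net']}, "
          f"ROI={result['roi_pct']:.0f}%")
```

Running both scenarios through the same function makes the sensitivity visible: with these placeholder inputs the result swings from negative to positive on the hours-saved assumption alone, which is why that figure should come from pilot measurements and not from estimates.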

Practical example: digital twins + smart glasses

Imagine a pump inspection workflow:

- The technician dons the Meta Ray-Ban Display and opens the pump’s SOP.

- The HUD shows the next step and an overlay of the pump’s live vibration and temperature (pulled from the asset’s digital twin).

- If readings cross anomaly thresholds, an AI diagnostic prompt shows likely causes and the parts required.

- If uncertain, the technician starts a live assist stream; a remote SME views the POV, marks hotspots, and guides repairs.

- Completion is pushed to the CMMS and archived with a time-stamped video for audit.

This workflow reduces MTTR, improves first-time fix rates, and feeds the twin with validated inspection outcomes, closing the loop between the physical asset and its virtual counterpart.
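The threshold step in this workflow can be sketched as a simple classification of twin telemetry. Everything below is hypothetical: the field names, units, and limit values are placeholders chosen for illustration, not values from a standard or from any real pump, and the function names are not part of any digital twin product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    warn: float
    alarm: float


# Placeholder limits; real values come from the asset's engineering data.
LIMITS = {
    "vibration_mm_s": Limit(warn=4.5, alarm=7.1),
    "temperature_c": Limit(warn=70.0, alarm=85.0),
}


def classify(reading):
    """Map each monitored field to 'ok', 'warn', 'alarm', or 'missing'."""
    status = {}
    for field, limit in LIMITS.items():
        value = reading.get(field)
        if value is None:
            status[field] = "missing"
        elif value >= limit.alarm:
            status[field] = "alarm"
        elif value >= limit.warn:
            status[field] = "warn"
        else:
            status[field] = "ok"
    return status


def needs_diagnostic(status):
    """True when any field crossed a threshold, i.e. show the AI prompt."""
    return any(s in ("warn", "alarm") for s in status.values())


reading = {"vibration_mm_s": 3.0, "temperature_c": 72.0}
status = classify(reading)
print(status, needs_diagnostic(status))
```

Keeping the classification separate from the display logic means the same status can drive the HUD overlay, the diagnostic prompt, and the record written back to the CMMS.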

Competitive & adoption outlook (what to expect in 2026-2028)

Meta’s 2025 launch marks a turning point but is a step, not the finish line. Other platform players, including Snap, Google and its partners, and emerging AR specialists, are pushing competing hardware and developer platforms. IDC and other market trackers anticipate strong growth driven by improved ergonomics, lower price points, and richer developer ecosystems over the next 2-4 years.

For enterprises: now is the time to pilot and define standards. For consumer mass adoption, expect incremental evolution (larger FOVs, longer battery, slimmer designs) through 2026 and beyond.

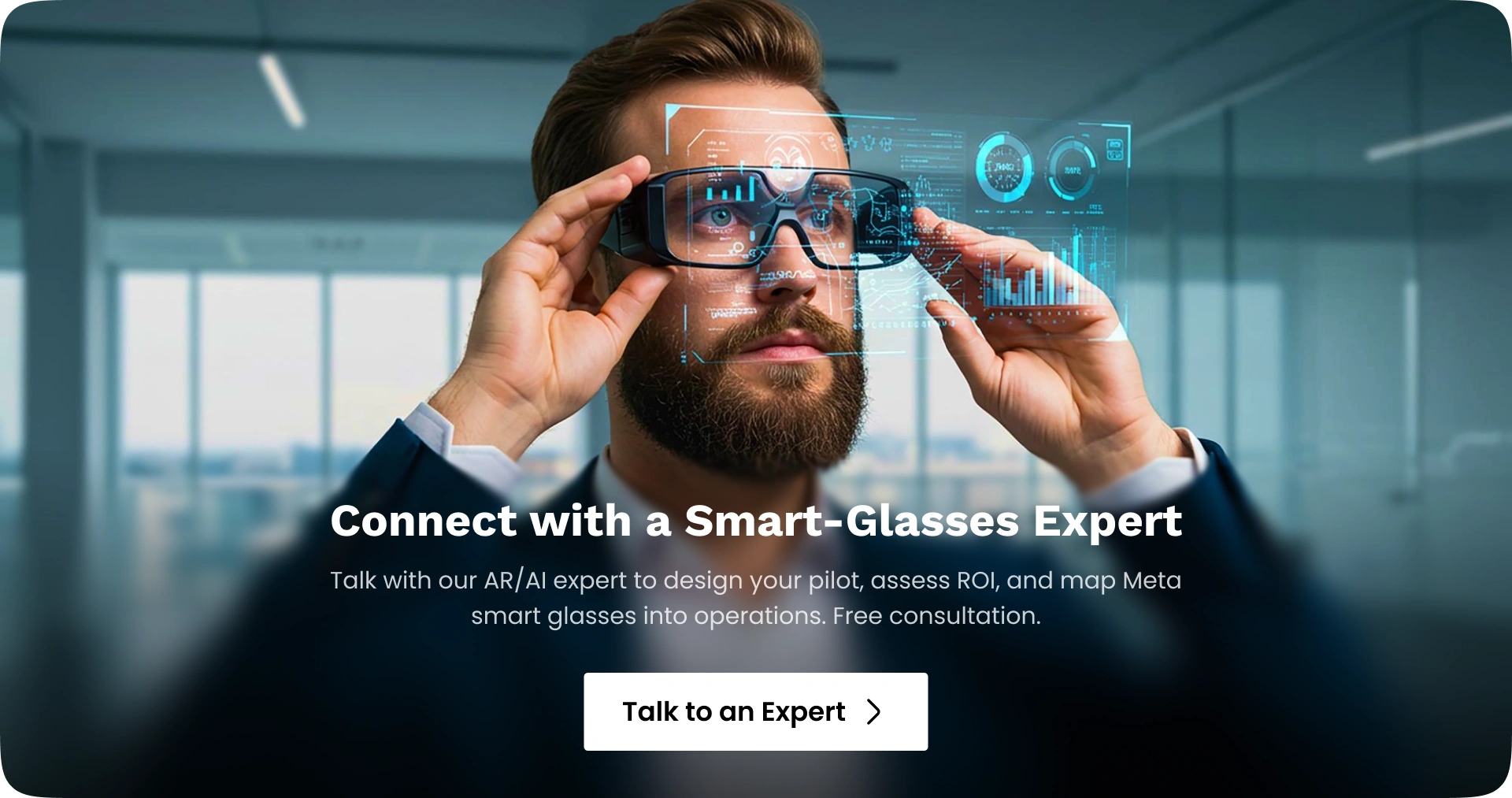

Conclusion & CTA

Meta’s Ray-Ban Display and Neural Band bring a pragmatic, enterprise-grade feature set to market: glanceable displays, robust capture, and discreet EMG control. Those capabilities make smart glasses a practical tool for pilots in maintenance, inspection, training, and logistics, especially when integrated into digital twin and AI back ends.

Ready to pilot? The Intellify specializes in AR/VR, AI/ML, and digital twin automation for enterprise pilots that deliver measurable KPIs. We provide a 90-day Proof-of-Value package: value-stream selection, a working AR workflow, CMMS integration, and a full ROI report. Contact The Intellify to get a tailored pilot proposal.

FAQs

1. What are Meta glasses?

Meta glasses are AR-enabled smart glasses (like the Ray-Ban Display) that combine a small heads-up display, camera, audio, and AI features to deliver glanceable information and hands-free workflows.

2. What do Meta glasses do?

Meta glasses show notifications, navigation, live translations, and AI answers on a tiny HUD, capture POV photos/video, and allow hands-free control via voice, touch, or the Neural Band EMG gestures.

3. How much do Ray-Ban Meta glasses cost?

The Ray-Ban Display (Meta’s display-capable model) launched at $799, including the Neural Band; other Ray-Ban Meta variants and sport models (Oakley Vanguard) have lower price points. Check official retail channels for exact regional pricing and bundles.

4. Are Ray-Ban Meta smart glasses worth buying in 2025?

If you need hands-free workflows, live remote assistance, or glanceable AR for enterprise tasks, they’re a strong early option; for casual consumers, consider whether the current HUD size, battery life, and app ecosystem match your everyday needs.

5. What are the main Meta glasses features?

Key features include a color in-lens display, a 12MP POV camera, open-ear audio, on-frame touch and voice control, and the accessory Neural Band for EMG gesture control, enabling navigation, messaging, live translation, and AR overlays.

6. Can Meta glasses work with iPhone and Android?

Yes, Meta’s smart glasses pair with Android and iPhone for notifications, app connectivity, and AI features, though some integrations may differ between platforms.

7. What is the battery life of Meta smart glasses?

Battery life varies by usage: active camera use, streaming, and display use drain the battery faster, so typical mixed-use runtime depends on the workload and industry setting. Plan for charging cases and power management when deploying at scale.

8. Are Meta glasses private and secure?

Meta includes privacy options, but enterprises should implement E2E encryption, visible recording indicators, opt-in recording policies, and governance controls to meet GDPR/HIPAA and reduce social concerns.

9. Can Meta glasses integrate with enterprise systems and digital twins?

Yes, Meta glasses can be integrated with CMMS, ERP, and digital twin platforms to surface live telemetry, work orders, and SOP overlays for field maintenance and inspections. The Intellify builds those integrations for measurable ROI.

10. How do Meta glasses help field service and maintenance?

They deliver hands-free SOPs, live remote expert streaming, and on-glass telemetry overlays, reducing MTTR, improving first-time-fix rates, and accelerating technician onboarding.

11. Where can I buy Ray-Ban Display (Meta) glasses?

Meta’s Ray-Ban Display launched via select retailers (Ray-Ban stores, Best Buy, and authorized optical partners) and will expand to additional markets; check Meta’s product pages and official retailers for current availability.

12. Will smart glasses be mainstream by 2026?

Adoption is incremental: enterprise pilots and improved hardware will accelerate use in 2026, but mainstream consumer adoption depends on larger FOVs, longer battery life, lower price points, and a richer app ecosystem.

13. What are the best smart glasses for enterprise use?

The best smart glasses for enterprise depend on the use case; display-enabled models (like Meta’s Ray-Ban Display) suit glanceable AR and AI workflows, while camera-only or sport models suit capture-first scenarios. Evaluate on battery, ruggedness, SDK support, and integration capabilities.

14. Do Meta glasses support real-time translation and captions?

Yes, one of the core features is live translation and captioning, showing subtitles or translated text on the HUD to facilitate conversational use across languages.

15. How should businesses pilot Meta smart glasses successfully?

Start with a 90-day pilot focused on a single value stream (e.g., inspections), define KPIs (MTTR, first-time-fix, training time), use edge/cloud architecture for resilience, enforce privacy/security policies, and iterate before scaling. The Intellify offers turnkey pilot programs to accelerate this process.

Written by Jalaj Shah

The COO and Co-Founder of The Intellify. Jalaj enjoys experimenting with new strategies. His posts are fantastic for businesses seeking innovative development ideas. Discover practical insights from his engaging content.
