Summary

Responsible AI refers to the design, development, and deployment of AI systems that are safe, fair, transparent, and accountable. This guide explains the core principles of responsible AI, governance models, practical development practices, and sector-specific considerations (for example, responsible AI in healthcare). It compares prevailing industry approaches (Microsoft, SAP, ISO guidance) and offers actionable steps your team can apply today. TheIntellify’s pragmatic checklist at the end gives a ready-to-run roadmap for implementation.

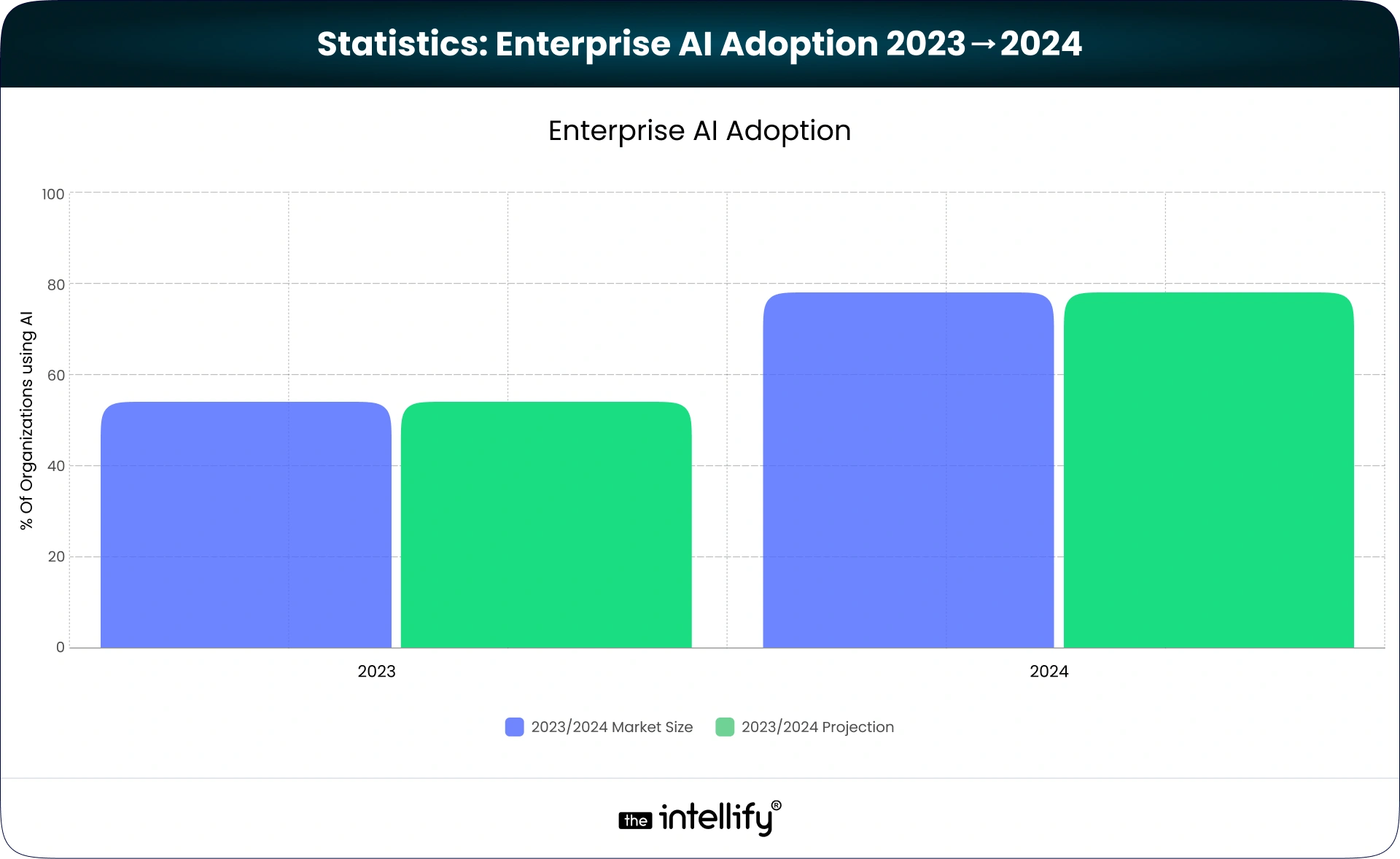

Global Statistics Report

- 78% of organisations reported using AI in at least one business function in 2024.

- AI adoption accelerated from 55% to 78% in the space of a year, showing fast enterprise uptake and the urgent need for governance.

- In 2025 surveys, a large majority of executives acknowledge AI risks and the value of ethical guidelines; however, a substantial governance gap remains across organisations.

- Leading vendors and standards bodies (Microsoft, SAP, ISO) now publish responsible AI frameworks and guidance covering fairness, transparency, privacy, and accountability; these form the baseline for enterprise programs.

Introduction: What is responsible AI, and why does it matter?

As AI transitions from experiments to mission-critical systems, the question “what is responsible AI?” has shifted from an academic debate to a board-level priority. Responsible AI is not a single technology; it’s a cross-functional set of principles, governance, engineering practices, and ongoing monitoring that ensures AI systems deliver benefits while minimising harms (bias, privacy invasion, safety failures, opacity). The increasing adoption of AI (see statistics above) makes responsible AI governance essential for legal compliance, user trust, and business continuity.

Core responsible AI principles

Every robust responsible AI program is built on a few shared principles. Microsoft, SAP, and ISO word them slightly differently, but the core set is consistent:

- Fairness: Avoid discriminatory outcomes and ensure equitable treatment.

- Transparency & Explainability: Make how models reach decisions interpretable to stakeholders.

- Privacy & Security: Protect personal and sensitive data used or generated by AI.

- Accountability: Assign clear roles and processes for auditing and remediation.

- Reliability & Safety: Ensure models behave as intended across expected and edge cases.

These responsible AI principles form the checklist organizations use when designing AI systems and policies.

Function of responsible AI

The function of responsible AI is practical and threefold:

- Risk reduction: Identify, quantify, and mitigate harms (legal, reputational, operational).

- Trust building: Give users, customers, and regulators confidence that AI systems act appropriately.

- Value protection & enhancement: Ensure AI-driven products deliver sustainable business value without hidden costs from failures or lawsuits.

Together, these functions convert abstract ethics into measurable program goals: bias metrics, logging and audit trails, consent flows, incident response playbooks, and SLAs for model performance drift.
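
To make "bias metrics" concrete, here is a minimal sketch of one common group-level metric, the demographic parity difference (the gap in positive-prediction rate between groups). The function names, sample predictions, and group labels are illustrative assumptions, not part of any vendor framework, and a real program would track several metrics rather than this one alone.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical binary predictions for two groups, "a" and "b".
preds = [1, 0, 1, 1, 0, 0, 1, 0]
grps = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(preds, grps)
```

Here group "a" is selected 75% of the time and group "b" 25% of the time, so the gap is 0.5. What counts as an acceptable gap is a policy decision for the governance body, not something the metric itself answers.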

Responsible AI governance: structures that work

Good governance turns principles into action. A responsible AI governance model typically includes:

- Executive sponsorship (C-level owner) to align AI risk with business strategy.

- Cross-functional AI ethics board (legal, security, product, ML engineers, domain experts) for policy and case review.

- Clear policies & standards: Coding standards, data handling rules, model documentation templates (model cards, datasheets).

- Operational controls: Model registry, pre-deployment checks, automated fairness and robustness tests, privacy-preserving pipelines.

- Continuous monitoring & audit: Production monitoring for drift, fairness regression, and security anomalies.

Implemented well, responsible AI governance is the backbone that lets teams scale AI while staying compliant and trustworthy. Guidance from Microsoft, SAP, and ISO is a good reference for governance components and templates.
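
As one illustration of how a documentation template can double as an operational control, the sketch below models a model card as a small data structure and reports which fields are still empty before registration. The field names and the `missing_fields` helper are assumptions chosen for this example; published model card templates differ in their exact sections.

```python
from dataclasses import dataclass, field, asdict

@dataclass
class ModelCard:
    """A deliberately small, hypothetical model card schema."""
    name: str
    version: str
    owner: str
    intended_use: str
    training_data: str
    fairness_metrics: dict = field(default_factory=dict)
    known_limitations: list = field(default_factory=list)

def missing_fields(card):
    """Return the names of fields that are empty and should block registration."""
    return [key for key, value in asdict(card).items() if not value]

card = ModelCard(
    name="credit-scorer",
    version="1.2.0",
    owner="",  # no accountable owner assigned yet
    intended_use="Rank loan applications for manual review",
    training_data="applications-2023 snapshot",
)
gaps = missing_fields(card)
```

A registry hook could refuse to accept the model while `gaps` is non-empty, which turns "assign clear roles" and "document limitations" into checks that actually run.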

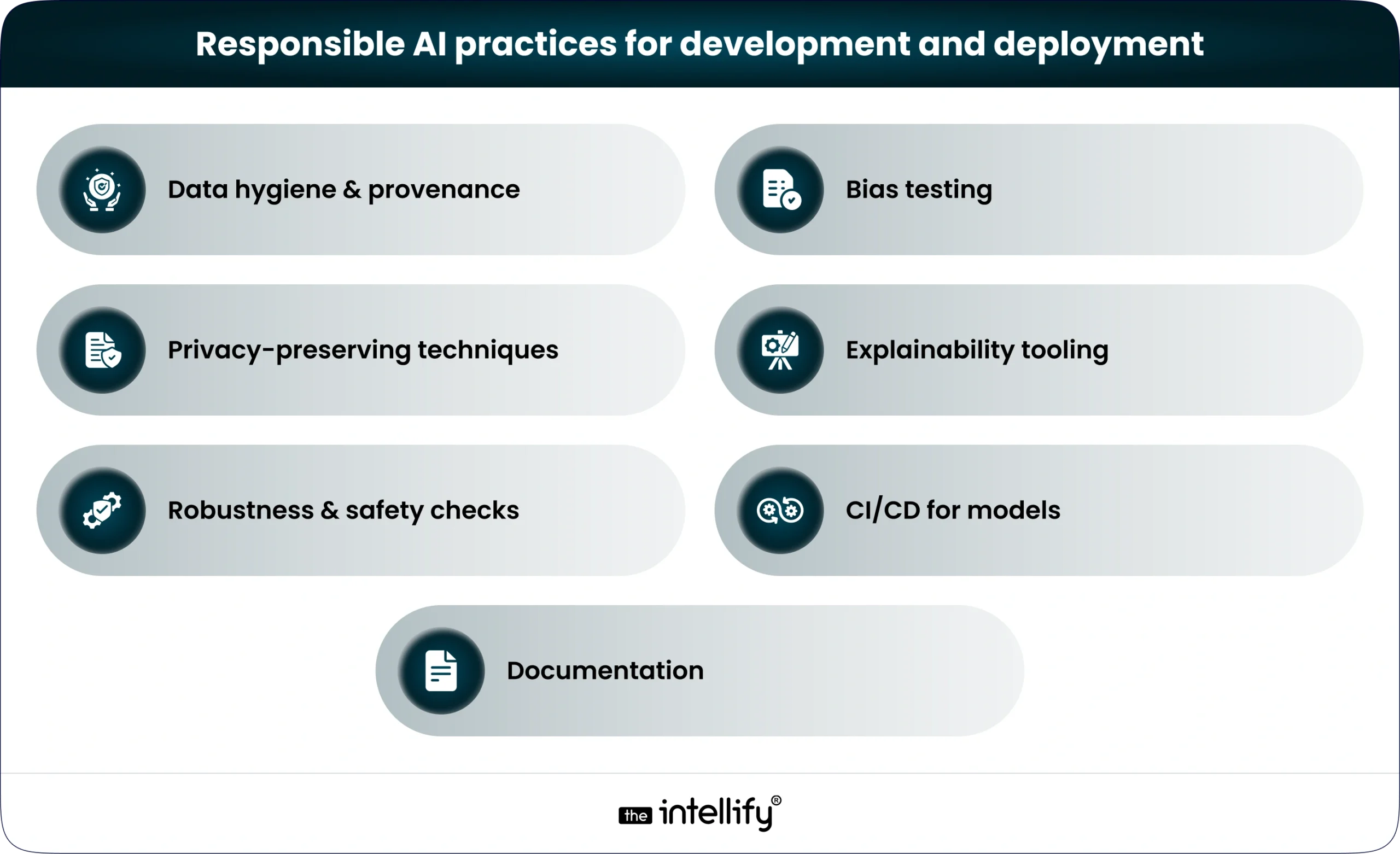

Responsible AI practices for development and deployment

Practical engineering practices make responsible AI real:

- Data hygiene & provenance: track sources, label quality, and lineage. Use data versioning and immutable audit logs.

- Bias testing: run both group-level and intersectional fairness tests during training and pre-production.

- Privacy-preserving techniques: apply differential privacy, federated learning, or secure enclaves where required.

- Explainability tooling: integrate local (LIME/SHAP-like) and global explainability reports into model cards.

- Robustness & safety checks: adversarial testing, stress testing on edge inputs, and scenario simulations.

- CI/CD for models: include responsible checks in pipelines (automated fairness checks, threshold gating, approval workflows).

- Documentation: produce actionable model cards, datasheets for datasets, and decision-logic summaries for stakeholders.

These practices are compatible with cloud-native deployments across major vendors (AWS, Microsoft Azure, Google Cloud) that provide frameworks and toolsets to operationalize them.
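
Of the practices listed above, differential privacy is the easiest to misread, so a small sketch may help. It shows the Laplace mechanism for a counting query: compute the true count, then add noise scaled to sensitivity divided by epsilon. The function names and sample data are assumptions for illustration, and this floating-point version is a teaching aid only; production systems should rely on a vetted differential privacy library.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(values, predicate, epsilon, rng=None):
    """Count matching records and add Laplace noise.

    A counting query has sensitivity 1 (adding or removing one person
    changes the count by at most 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical patient ages; the true number aged 65 or over is 3.
ages = [34, 71, 68, 45, 80, 52]
noisy = private_count(ages, lambda age: age >= 65, epsilon=1.0,
                      rng=random.Random(42))
```

A smaller epsilon means more noise and stronger privacy; the released value is deliberately inexact, which is the trade-off teams need to budget for.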

Responsible AI solutions & vendor approaches

Large platform vendors now offer tools and guidance to implement responsible AI at scale:

- Microsoft: publishes responsible AI principles, a Responsible AI Standard, and Azure tooling for model interpretability and governance.

- Google: provides fairness and explainability tools, model cards guidance, and Cloud AI governance features (Vertex AI features and policies).

- AWS: offers security and data-protection building blocks, plus partner solutions for model monitoring and compliance.

- Open frameworks: ISO and other standards bodies provide high-level ethics frameworks and audit guidance for cross-border consistency.

When selecting a solution, evaluate provider alignment with your industry’s regulatory needs, integration ease, and whether their tooling supports your governance model (e.g., model registry, drift monitoring, and explainability).

Responsible AI in healthcare

Healthcare is a high-stakes domain where responsible AI matters most:

- Patient safety: models that drive diagnosis, triage, or treatment must meet clinical-validation standards and be continuously monitored for performance drift across demographics.

- Regulatory compliance: HIPAA (US), GDPR (EU), and local regulations require strict data handling, consent, and explainability for clinical uses.

- Clinical explainability: clinicians need interpretable outputs (not black-box scores) and human-in-the-loop workflows for final decisions.

- Audit trails & provenance: tracking dataset versions, model updates, and decision logs is essential for clinical audits and malpractice defense.

Designing responsible AI in healthcare requires clinical partners, thorough validation studies, and a governance model that includes medical ethics oversight.
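
One way to make an audit trail tamper-evident is to chain entries by hash, so that altering any past record invalidates everything after it. The sketch below is a minimal in-memory version using SHA-256; the event fields (`dataset_version`, `model_version`, `decision`) are hypothetical, and a clinical deployment would also need durable storage, access control, and retention rules that this example does not cover.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(event, prev_hash):
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(log, event):
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash,
             "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry

def verify_chain(log):
    """Recompute every hash; return False if any entry was altered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if _digest(entry["event"], prev_hash) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_entry(log, {"dataset_version": "2024-03", "model_version": "1.0.0"})
append_entry(log, {"model_version": "1.1.0", "approved_by": "review-board"})
append_entry(log, {"decision": "flag-for-review", "model_version": "1.1.0"})
intact = verify_chain(log)
```

If someone later edits the second entry, `verify_chain` returns False, which gives auditors a cheap integrity check before they rely on the log.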

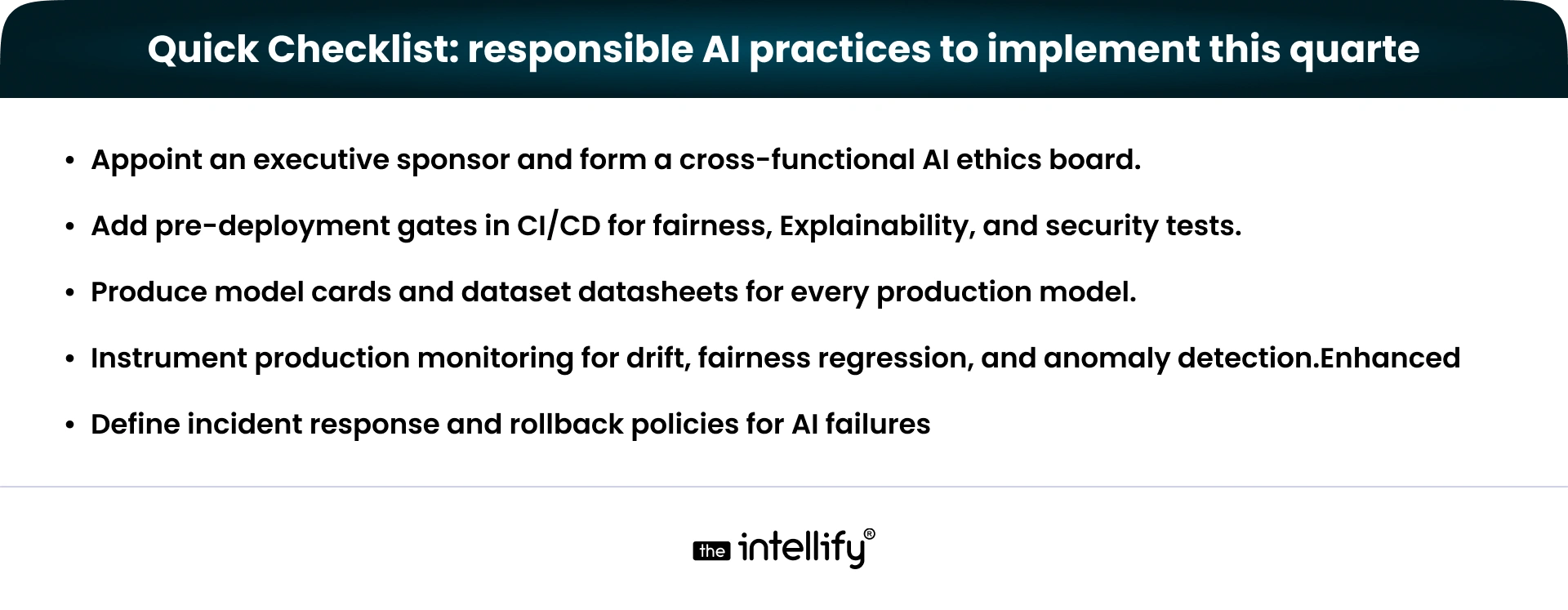

Quick checklist: responsible AI practices to implement this quarter

Why choose TheIntellify for responsible AI implementation

At TheIntellify, we build practical, responsible AI programs that translate principles into production-ready systems. Our approach focuses on measurable outcomes and long-term governance. What that looks like in practice:

- Cross-functional programs: We design governance that ties product, legal, and ML teams into a single operating rhythm (policy → tests → monitoring).

- Vendor-neutral engineering: Our solutions integrate with AWS, Azure, and Google Cloud, so responsible AI plans built on any of these platforms are supported.

- Domain-aware validation: For regulated sectors (healthcare, finance), we embed domain experts into model validation and documentation.

- Practical automation: We codify fairness, explainability, and privacy checks into CI/CD, reducing manual review cycles.

- Transparent reporting: We deliver dashboards and audit artifacts (model cards, decision logs) that stakeholders and auditors can rely on.


Common pitfalls and how to avoid them

- Treating governance as paperwork: Avoid checklists without enforcement. Automate checks and tie to deployment gates.

- Ignoring production drift: Models degrade; monitoring is as important as initial validation.

- Not involving domain experts: Technical teams alone miss context-specific harms.

- Single-metric thinking: Fairness, accuracy, and privacy often trade off; optimize across multiple objectives.

- Overlooking supply-chain risks: Third-party models and datasets require their own due diligence.
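
For the production-drift pitfall, one widely used signal is the Population Stability Index (PSI), which compares the distribution of a feature or score at training time with what the model sees in production. The sketch below is a plain-Python version with equal-width bins; the bin count, the epsilon floor, and the sample data are assumptions, and the commonly quoted cut-offs (roughly 0.1 for "watch" and 0.25 for "investigate") are rules of thumb rather than standards.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate baseline: everything lands in one bin

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical model scores: training baseline vs. a shifted production sample.
baseline = [i / 100 for i in range(100)]
shifted = [v + 0.5 for v in baseline]
drift_score = psi(baseline, shifted)
```

Identical samples give a PSI of zero, while the shifted sample above scores far beyond the 0.25 rule of thumb. Running the same check per demographic group helps catch drift that only affects part of the population.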

Next steps: roadmap for teams

- Month 0–1: Define principles, appoint a sponsor, and create an AI ethics board.

- Month 1–3: Inventory models & datasets, start model cards, implement basic monitoring.

- Month 3–6: Add automated fairness and robustness tests into CI/CD; privacy-preserving pipelines.

- Month 6+: Continuous audits, external review, alignment to evolving regulatory frameworks.
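
The Month 3–6 step, adding automated tests into CI/CD, can be sketched as a threshold gate: the pipeline produces a metrics report, and the gate blocks deployment when any metric breaches its limit or is missing. The metric names and limits below are hypothetical placeholders; real values should come from the policies your ethics board sets.

```python
# Hypothetical limits; "min" means the value must not fall below the limit,
# "max" means it must not exceed it.
THRESHOLDS = {
    "accuracy": ("min", 0.85),
    "demographic_parity_difference": ("max", 0.10),
    "psi": ("max", 0.25),
}

def gate(metrics, thresholds=THRESHOLDS):
    """Return a list of failure messages; an empty list means the gate passes."""
    failures = []
    for name, (kind, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: metric missing from report")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} is below minimum {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} is above maximum {limit}")
    return failures

def exit_code(metrics):
    """Map gate results to a process exit code for the pipeline step."""
    failures = gate(metrics)
    for failure in failures:
        print("GATE FAILED:", failure)
    return 1 if failures else 0

# Hypothetical report from an evaluation job: fairness gap is too large.
report = {"accuracy": 0.91, "demographic_parity_difference": 0.14, "psi": 0.08}
code = exit_code(report)
```

In a pipeline, the step would pass `exit_code(report)` to `sys.exit`, so a non-zero result stops the deployment. Treating a missing metric as a failure is a deliberate choice here: it prevents a skipped test from silently passing the gate.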

Conclusion: Responsible AI is practical, measurable, and urgent

“What is responsible AI?” It’s a pragmatic program combining principles, governance, engineering, and monitoring to ensure AI delivers value safely and fairly. With AI adoption accelerating across industries, the time to act is now: convert principles into automated checks, clear accountability, and transparent documentation. Use the checklist and roadmap above to get started, and lean on established frameworks from Microsoft, ISO, and enterprise vendors as references while tailoring policy to your industry and risk profile.

FAQs

1. What is responsible AI?

A: A practical approach to designing, developing, and deploying AI that’s safe, fair, transparent, and accountable, ensuring AI benefits users while minimizing harms.

2. What are the core responsible AI principles?

A: Fairness, transparency/explainability, privacy/security, accountability, and reliability. Together they form the baseline for any responsible AI program.

3. How do you implement responsible AI governance in an enterprise?

A: Start with executive sponsorship, form a cross-functional ethics board, create model/data inventories, add CI/CD gates for fairness tests, and monitor models in production.

4. What is the function of responsible AI in product development?

A: To reduce legal/reputational risk, build user trust, and ensure models deliver reliable business value through testing, documentation, and monitoring.

5. What are the must-have responsible AI practices for developers?

A: Data provenance, bias testing, explainability (documented in model cards), privacy measures (data protection impact assessments, differential privacy), and automated pre-deployment checks.