Summary

This guide explores how AI and digital twin technology are transforming logistics. We cover definitions, market trends, core applications (predictive analytics, autonomous fleets, AI chatbots), and cross-industry digital twin use cases (supply chain, warehouses, healthcare, construction). We highlight real-world examples (e.g., Amazon Scout, TuSimple, DHL, Maersk) and provide key statistics (AI in logistics market, digital twin market, adoption rates). Finally, we outline a roadmap for U.S. logistics leaders to adopt AI-driven solutions, ensuring competitive advantage in an era of e-commerce growth and supply chain disruption.

Introduction

Logistics today faces unprecedented complexity: surging e-commerce demand, volatile supply chains, labor shortages, and sustainability pressures. Shippers and logistics providers cite cost management and driver/warehouse labor shortages as top challenges. To thrive, many firms are turning to advanced technology. Indeed, a McKinsey survey found 87% of shippers have sustained or increased tech investment since 2020, and 93% plan to keep doing so. Key innovations at the frontier include artificial intelligence (AI) and digital twinning.

These technologies promise smarter forecasting, real-time visibility, and “virtual prototyping” of supply chains and facilities. By integrating AI and digital twin models, companies can simulate scenarios (e.g., “what-if” disruptions), optimize routes and layouts, and even automate laborious tasks, all without interrupting real operations. The result can be measurable gains in efficiency, cost savings, and resilience across transportation and warehousing, with improvements of 10 to 40% or more often reported. This guide unpacks AI in logistics and digital twin technology, defining each, reviewing market trends, and showing how to implement them for rapid ROI.

What is AI in Logistics and Transportation?

AI in logistics refers to using machine learning, computer vision, robotics, and related techniques to optimize supply chain and transportation operations. At its core, AI ingests vast data (inventory levels, delivery histories, traffic, weather, IoT sensor feeds, etc.) to make smarter predictions and decisions. For example, AI predictive analytics can forecast customer demand or transit delays before they happen.
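
To make "forecasting from historical data" concrete, the sketch below implements simple exponential smoothing, a common baseline that teams often compare against before adopting richer machine learning models. The function name, the smoothing weight `alpha`, and the sample demand series are illustrative assumptions, not any vendor's method.

```python
def exponential_smoothing_forecast(history, alpha=0.3):
    """One-step-ahead demand forecast using simple exponential smoothing.

    history: past demand values, oldest first (must be non-empty).
    alpha:   smoothing weight in (0, 1]; higher values react faster to recent demand.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]  # initialize the smoothed level with the first observation
    for demand in history[1:]:
        # Blend the newest observation with the previous smoothed level.
        level = alpha * demand + (1 - alpha) * level
    return level


weekly_units = [120, 130, 125, 160, 170, 165]  # hypothetical weekly demand for one SKU
forecast = exponential_smoothing_forecast(weekly_units, alpha=0.5)
print(f"Next-week forecast: {forecast:.1f} units")
```

Raising `alpha` makes the forecast follow recent spikes more quickly at the cost of more noise; strongly seasonal items usually need a model with explicit seasonality.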

Route planning software uses AI to continuously re-route trucks around traffic jams. Warehouse systems apply vision-based AI to scan and track inventory or guide robots and workers. Even customer service uses AI: chatbots and virtual assistants handle routine inquiries about shipments or deliveries.
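
Re-routing around a traffic jam can be framed as a shortest-path search over a road graph whose edge weights are live travel times. The sketch below uses Dijkstra's algorithm with Python's standard `heapq` module; the graph, node names, and minute values are hypothetical, and commercial route planners add many more constraints (delivery windows, vehicle capacity, driver hours).

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm over travel times in minutes.

    graph: dict mapping node -> dict of neighbor -> travel time (non-negative).
    Returns (total_minutes, [nodes...]), or (inf, []) if the goal is unreachable.
    """
    queue = [(0.0, start, [start])]
    best = {start: 0.0}
    while queue:
        minutes, node, path = heapq.heappop(queue)
        if node == goal:
            return minutes, path
        if minutes > best.get(node, float("inf")):
            continue  # stale queue entry; a faster path to this node was already found
        for neighbor, cost in graph.get(node, {}).items():
            candidate = minutes + cost
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                heapq.heappush(queue, (candidate, neighbor, path + [neighbor]))
    return float("inf"), []


roads = {  # hypothetical road network, travel times in minutes
    "Depot": {"A": 10, "B": 15},
    "A": {"Customer": 10},
    "B": {"Customer": 10},
}
print(shortest_route(roads, "Depot", "Customer"))  # (20.0, ['Depot', 'A', 'Customer'])
roads["A"]["Customer"] = 40  # a live traffic feed reports a jam on A -> Customer
print(shortest_route(roads, "Depot", "Customer"))  # (25.0, ['Depot', 'B', 'Customer'])
```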

Broadly, companies embed AI tools, from demand-forecasting algorithms to robotic process automation (RPA), transforming traditional supply chains into “smart, adaptive” networks. AI helps logistics managers predict transit times and carrier delays, optimize inventory and replenishment, and even flag anomalies (like potential out-of-stock items or suspicious supply issues). Autonomous fleets of trucks and delivery robots also fall under this umbrella (more on that below). In short, AI in logistics spans any application where software learns from data to automate decisions, improve efficiency, or provide new capabilities.

For instance, Oracle notes that “AI is used in logistics for a variety of purposes, such as forecasting demand, planning shipments, optimizing warehousing, and gaining step-by-step visibility into routes, cargo conditions, and potential disruptions”. By applying AI-driven models to historical delivery data and real-time inputs (like GPS and weather), firms can identify at-risk shipments early and switch routes or carriers before delays occur.
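
A minimal sketch of flagging at-risk shipments follows. It deliberately uses a simple heuristic rather than a trained model: expected total transit is the lane's historical median (or the elapsed time, if already longer) plus a weather delay estimate, and a shipment is flagged when that eats into a safety buffer before the promised time. The `Shipment` fields, the lane name, the buffer, and the data are all hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Shipment:
    shipment_id: str
    lane: str                          # origin-destination pair, e.g. "CHI-DAL"
    hours_in_transit: float            # elapsed so far
    promised_hours: float              # committed total transit time
    weather_delay_hours: float = 0.0   # hypothetical estimate from a weather feed


def at_risk(shipments, lane_history, buffer_hours=2.0):
    """Return IDs of shipments whose expected total transit eats into the buffer.

    Heuristic (an assumption, not a trained model): expected total transit is the
    lane's historical median, or the elapsed time if that is already longer,
    plus the weather delay estimate.
    """
    flagged = []
    for s in shipments:
        typical = median(lane_history[s.lane])
        expected_total = max(s.hours_in_transit, typical) + s.weather_delay_hours
        if expected_total > s.promised_hours - buffer_hours:
            flagged.append(s.shipment_id)
    return flagged


history = {"CHI-DAL": [20, 22, 21, 24, 23]}  # hypothetical past transit times (hours)
shipments = [
    Shipment("S1", "CHI-DAL", 10, 30),
    Shipment("S2", "CHI-DAL", 10, 30, weather_delay_hours=7),
    Shipment("S3", "CHI-DAL", 29, 30),
]
print(at_risk(shipments, history))  # ['S2', 'S3']
```

Flagged shipments are the candidates for switching routes or carriers; in practice the median would be replaced by a model that also uses real-time GPS and weather inputs.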

Companies that adopt these AI solutions see concrete benefits: McKinsey reports that early adopters of AI-powered supply chain software have about 15% lower logistics costs and 35% better inventory levels than their peers. Moreover, AI adoption is booming: a 2024 survey found 97% of manufacturing CEOs plan to use AI in operations within two years. In short, AI in logistics and transportation enables sharper forecasting, dynamic optimization, and automation of complex processes across the supply chain.

What is a Digital Twin?

A digital twin is a real-time virtual model of a physical object, system, or environment. In logistics, a digital twin can replicate anything from a single vehicle or warehouse to an entire supply chain network. The twin is fed live data (via IoT sensors, RFID, GPS, ERP, etc.) so that it “mirrors” the physical entity’s current state.
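
At its simplest, "mirroring" means keeping the latest known state of an asset and applying sensor readings as they arrive. The sketch below assumes a hypothetical reading format (`{"ts": ..., "values": {...}}`) and ignores out-of-order messages; real twin platforms add persistence, schemas, and much richer models on top of this idea.

```python
from dataclasses import dataclass, field


@dataclass
class AssetTwin:
    """Minimal digital twin: the latest known state of one physical asset."""
    asset_id: str
    state: dict = field(default_factory=dict)
    last_updated: float = 0.0  # timestamp of the newest applied reading

    def apply(self, reading):
        """Apply a reading shaped like {'ts': float, 'values': {...}} (hypothetical format).

        Returns False and leaves the state unchanged for out-of-order (stale) readings.
        """
        if reading["ts"] < self.last_updated:
            return False  # e.g., a message delayed in the network
        self.state.update(reading["values"])
        self.last_updated = reading["ts"]
        return True


truck = AssetTwin("truck-17")
truck.apply({"ts": 100.0, "values": {"fuel_pct": 80, "temp_c": 4.0}})
truck.apply({"ts": 160.0, "values": {"fuel_pct": 72}})
truck.apply({"ts": 130.0, "values": {"fuel_pct": 75}})  # stale, ignored
print(truck.state)  # {'fuel_pct': 72, 'temp_c': 4.0}
```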

This allows analysis, simulation, and optimization on the digital copy without disrupting the real-world operation. For example, a warehouse digital twin might consist of a 3D model of the facility with every rack, aisle, and robot, updated in real time. Managers can then simulate inventory movements, test new layouts, or run “what-if” scenarios (like sudden demand surges) entirely in the virtual twin before enacting changes on the floor.
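
A "what-if" run can be as simple as replaying a demand scenario against a zone's capacity. The sketch below tracks hour-by-hour backlog of unpicked orders under a hypothetical 30% demand surge; the order volumes and picks-per-hour capacities are illustrative assumptions, and a real warehouse twin would model individual pickers, travel, and equipment.

```python
def simulate_backlog(hourly_orders, picks_per_hour):
    """Backlog of unpicked orders at the end of each hour.

    hourly_orders:  orders arriving in each hour of the scenario.
    picks_per_hour: orders the zone can pick per hour (assumed constant).
    """
    backlog, trace = 0, []
    for arriving in hourly_orders:
        backlog = max(0, backlog + arriving - picks_per_hour)
        trace.append(backlog)
    return trace


baseline = [400, 450, 500, 450]                # hypothetical orders per hour
surge = [x * 13 // 10 for x in baseline]       # what-if: 30% demand surge
print(simulate_backlog(baseline, 500))         # [0, 0, 0, 0]
print(simulate_backlog(surge, 500))            # [20, 105, 255, 340]
print(simulate_backlog(surge, 600))            # [0, 0, 50, 35] with extra staffing
```

Comparing the last two runs shows how a manager could size extra staffing virtually before changing anything on the floor.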

Technically, digital twins leverage IoT sensors and cloud/AI to deliver insights: data from machines, vehicles, buildings, or shipments is collected and used to run physics-based or statistical models. The DHL Logistics Trend Radar defines digital twins as “virtual models that accurately mirror the real-time conditions and behaviors of physical objects or processes”.

This includes everything from an individual machine’s “asset twin” enabling predictive maintenance, to an “end-to-end twin” covering an entire supply chain for advanced planning. In manufacturing, McKinsey describes digital twins as “real-time virtual renderings of the physical world” that let companies simulate “what-if” production changes.

A simple example: Amazon’s warehouse team scans an existing fulfillment center with LIDAR to produce a dense point cloud of the entire facility, creating a raw digital twin.

The above point-cloud image (captured by Amazon’s LIDAR scanner) shows an actual fulfillment center in 3D. This data forms the basis of a warehouse digital twin. Engineers then align that scan with architectural CAD drawings and enrich it with metadata (like rack IDs or equipment specs) to produce a full 3D model.

The completed 3D model (above) blends the warehouse floor plan (bottom) with the LIDAR-scanned asset data (top). Every shelf, conveyor, and robot is represented. This 3D digital twin can now be used for layout optimization or simulation without touching the physical warehouse.

Digital twin technology thus creates a “single source of truth” for the real-world asset, enabling virtual testing of changes, predictive maintenance, stress-testing supply chains, and even remote equipment control. Importantly, digital twins can be nested: a single product (like a truck) can have its own twin, which links into a factory twin or a distribution network twin, providing visibility from the component level up through the entire supply chain.
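
Nesting can be sketched as a tree of twins in which each node can roll up metrics from its children. The `Twin` class, the `co2_kg` metric, and the values below are hypothetical and only illustrate the composition idea.

```python
from dataclasses import dataclass, field


@dataclass
class Twin:
    """A twin that may contain child twins (e.g., network -> fleet -> truck)."""
    name: str
    metrics: dict = field(default_factory=dict)
    children: list = field(default_factory=list)

    def total(self, metric):
        """Sum a metric over this twin and every nested child twin."""
        own = self.metrics.get(metric, 0)
        return own + sum(child.total(metric) for child in self.children)

    def find(self, name):
        """Depth-first search for a nested twin by name; returns None if absent."""
        if self.name == name:
            return self
        for child in self.children:
            hit = child.find(name)
            if hit is not None:
                return hit
        return None


fleet = Twin("fleet", children=[Twin("truck-1", {"co2_kg": 120}), Twin("truck-2", {"co2_kg": 95})])
network = Twin("network", children=[Twin("dc-east", {"co2_kg": 300}), fleet])
print(network.total("co2_kg"))                  # 515
print(network.find("truck-2").metrics)          # {'co2_kg': 95}
```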

Market Landscape & Trends

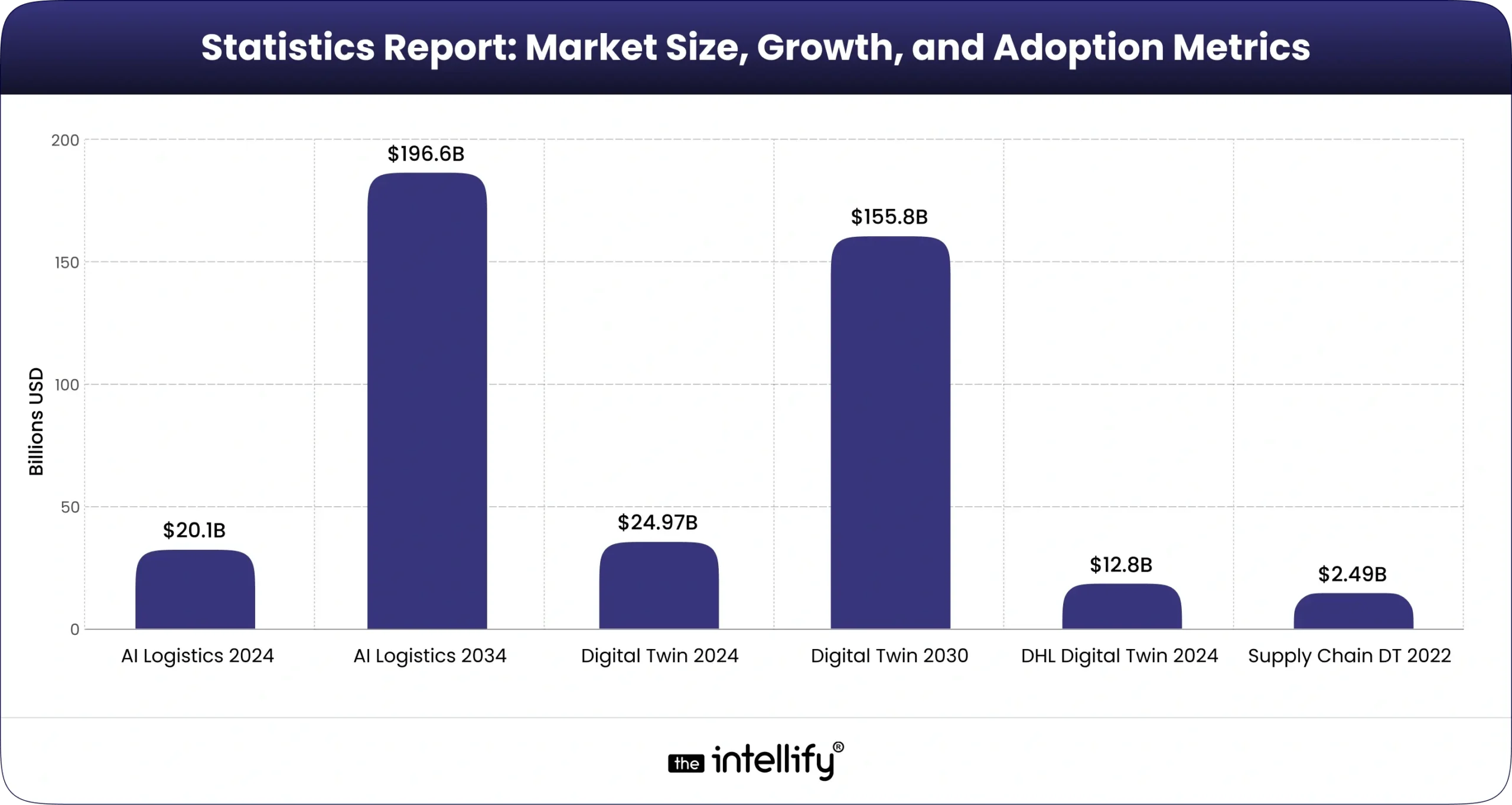

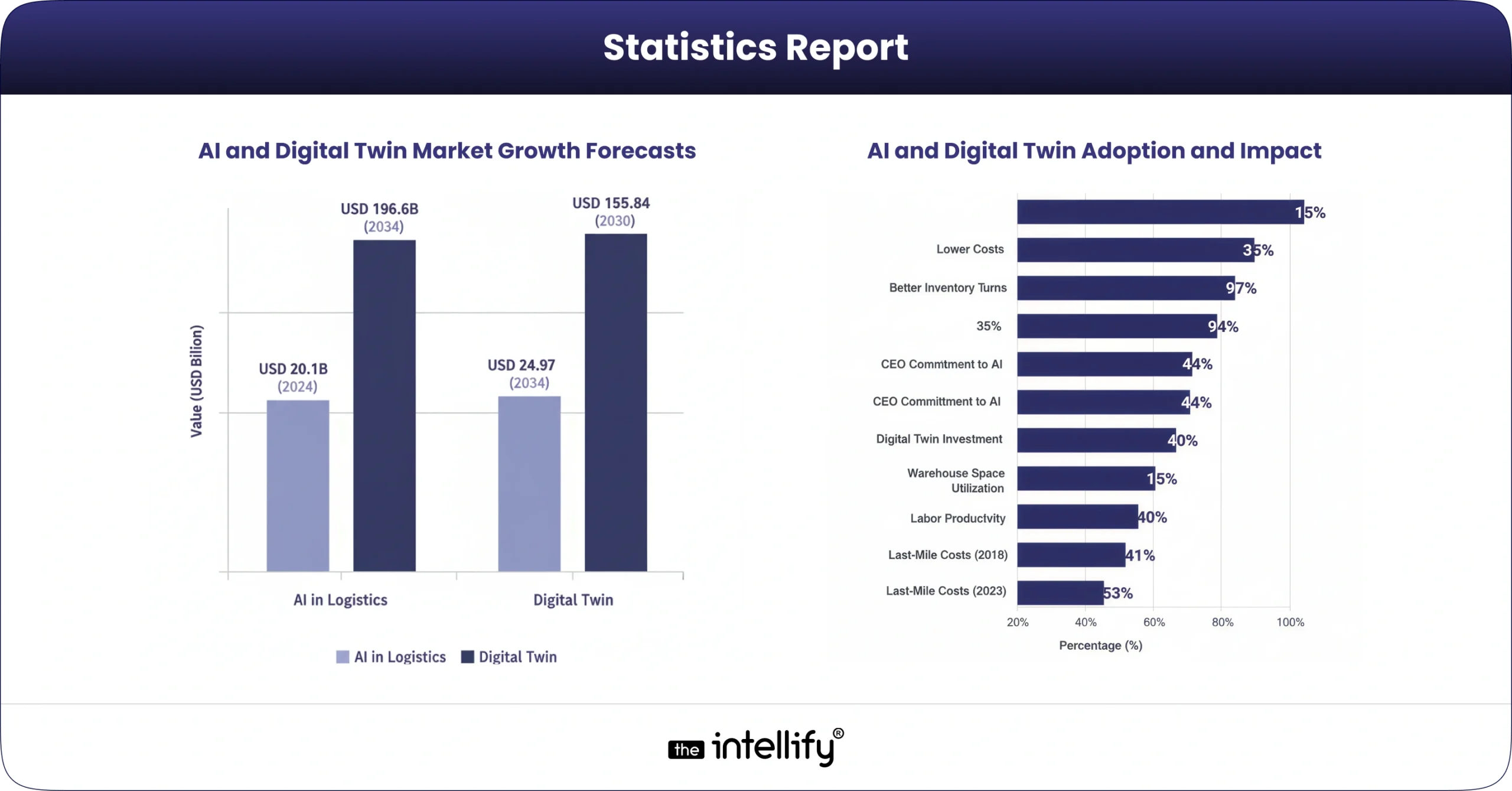

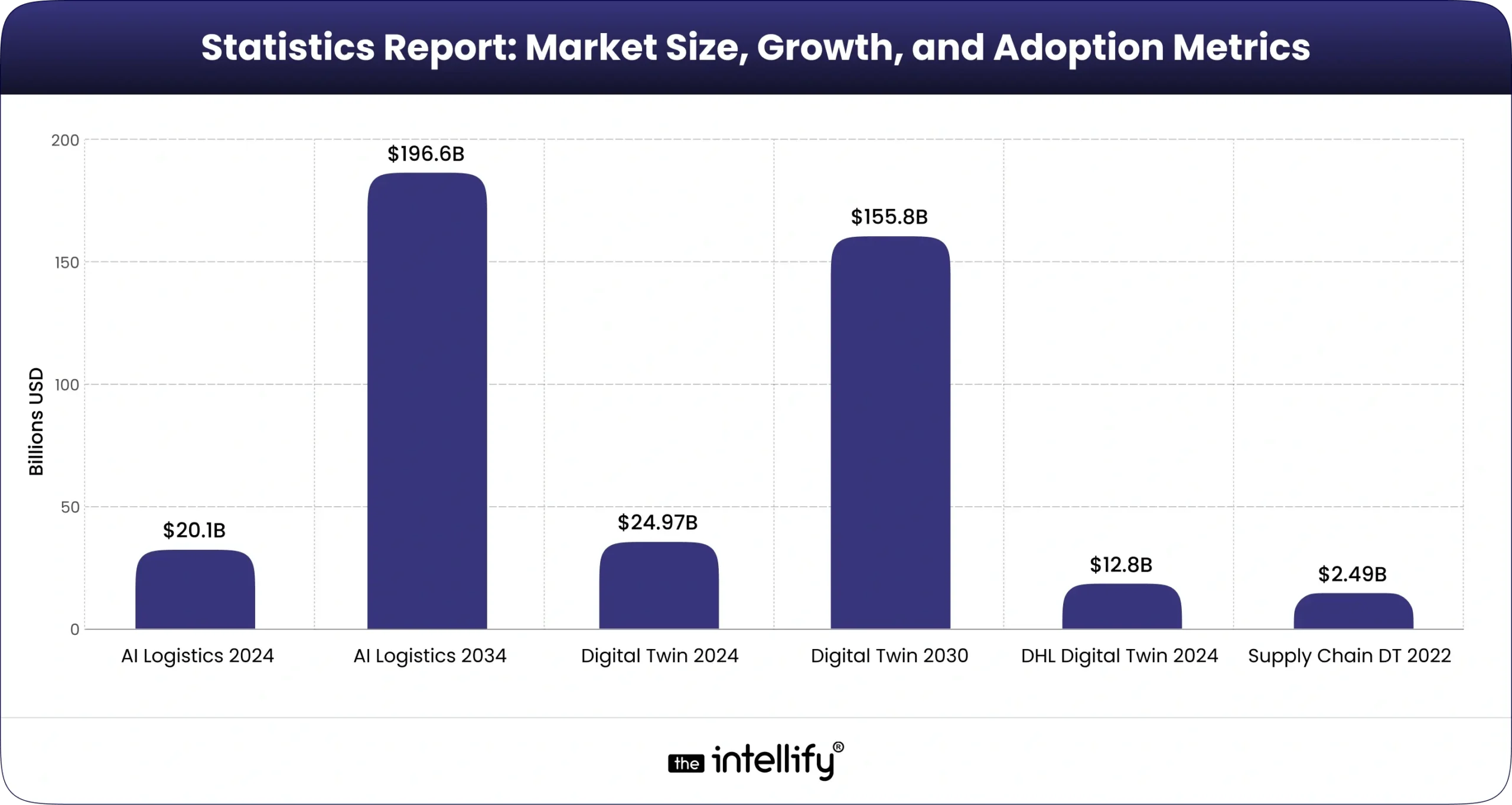

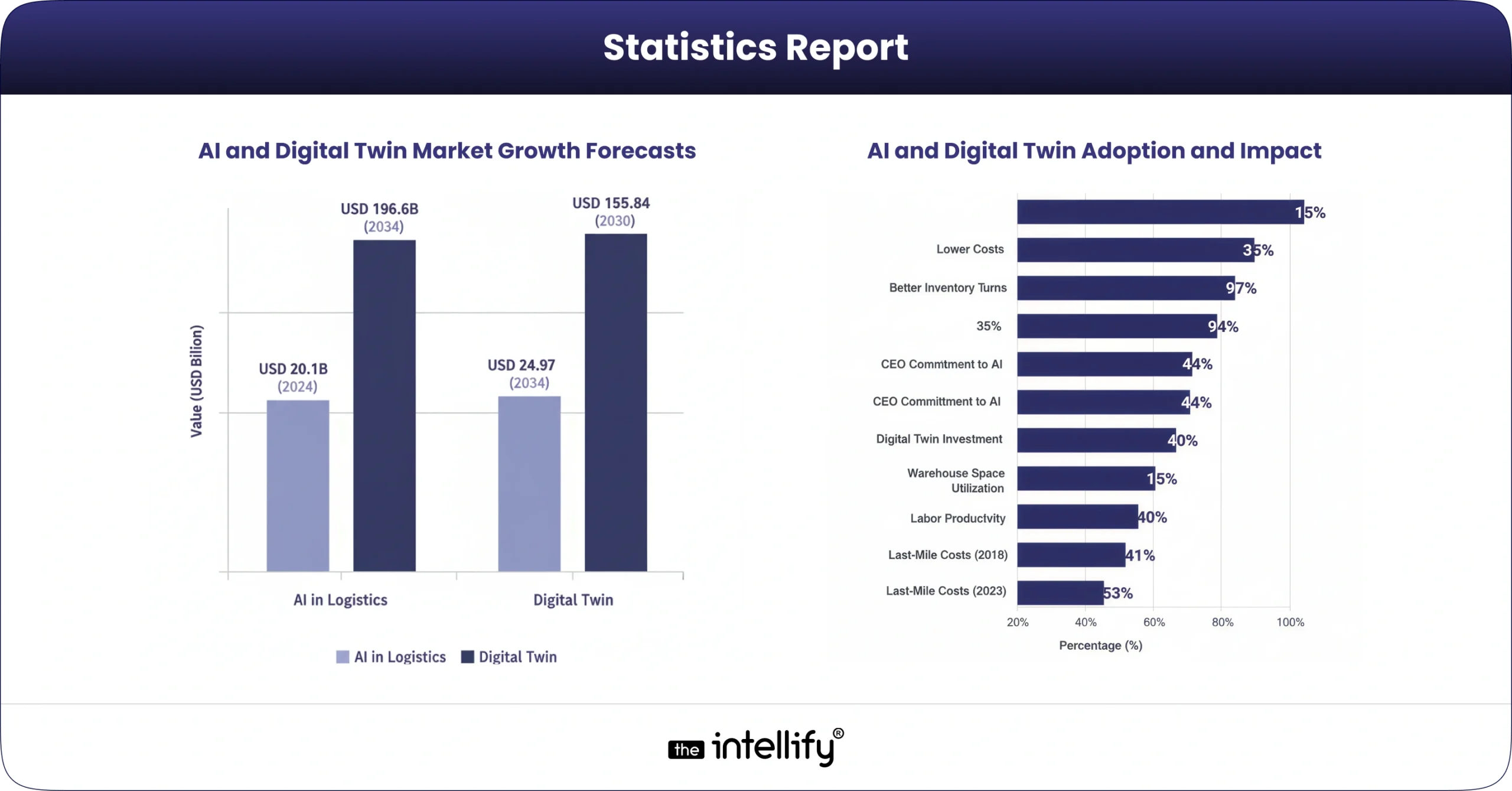

AI in logistics and digital twin markets are both expanding rapidly. According to industry research, the global AI in logistics & supply chain market was about $20.1 billion in 2024, and is projected to skyrocket (a 25.9% CAGR) to roughly $196.6 billion by 2034. Growth drivers include exploding e-commerce volumes, the need for real-time visibility, and new technologies like 5G/IoT enabling smarter warehouses.

For example, GMI Insights reports that real-time visibility demands, e-commerce growth, and the adoption of autonomous vehicles are pushing AI logistics adoption. McKinsey finds that most shippers are investing: 87% have maintained or grown tech budgets since 2020, and 93% plan to increase spend on digital tools.

The digital twin market is likewise booming. A Grand View Research report estimates the global digital twin market at about $24.97 billion in 2024, with a 34.2% CAGR pushing it to roughly $155.8 billion by 2030. (North America alone holds about a third of that market today.) Similarly, Maersk forecasts 30 to 40% annual growth, estimating a $125 to $150 billion global market by 2032.
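
The market figures above can be sanity-checked with the compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. Applied to the quoted start and end values, it gives roughly 25.6% for AI in logistics (2024 to 2034) and roughly 35.7% for digital twins (2024 to 2030), close to but not exactly the published 25.9% and 34.2%; the gaps likely come from each report's base-year and rounding conventions. The helper names are illustrative.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two market-size estimates."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, cagr, years):
    """Market size after compounding at a constant annual rate."""
    return start_value * (1 + cagr) ** years


# Figures quoted in this section (USD billions)
print(f"AI in logistics, 2024-2034: {implied_cagr(20.1, 196.6, 10):.1%}")
print(f"Digital twins, 2024-2030:   {implied_cagr(24.97, 155.84, 6):.1%}")
```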

Key factors fueling this include cheaper sensors/IoT, widespread cloud analytics, and the push for automation and resilience. The DHL Logistics Trend Radar also confirms that the digital twin market (valued at ~$12.8B in 2024) is expected to grow at ~40%+ CAGR. Supply chain & manufacturing are early adopters: Grand View notes the supply chain digital twin segment ($2.49B in 2022) growing ~12% annually through 2030.

In short, AI tools and digital twin solutions are moving from niche pilots to mainstream logistics technology. Cloud-based logistics platforms now routinely offer AI planning modules and digital-twin-based simulations. Advanced AI-driven simulations (sometimes called “AI digital twins”) are emerging; for example, some systems combine machine learning models with digital twin physics to provide real-time supply chain forecasts.

As one Forbes/McKinsey analysis notes, investments in cutting-edge tech (robotics, advanced analytics, network digital twins) are the “next frontier” for logistics productivity. In practice, this means logistics leaders are actively evaluating digital twin services (e.g., IoT platforms from AWS, Siemens, etc.) and AI solutions from SAP, Oracle, and others to optimize their networks.

Core Applications in Logistics

Logistics firms are deploying AI and digital twins across several core applications:

Predictive Analytics & Forecasting

At the heart of AI in logistics is predictive analytics. By training models on historical shipping, inventory, and customer data, companies can forecast demand spikes or supply bottlenecks before they occur. This lets managers adjust inventory and staffing proactively. For example, an AI model might analyze seasonality, supplier lead times, and transit delays to anticipate stockouts and trigger preemptive restocking. Similarly, predictive maintenance is a key use case: IoT sensors on forklifts or cargo trackers feed live data into digital twins and AI models, enabling alerts for failures (e.g., a truck engine showing abnormal vibration).
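
The "abnormal vibration" alert can be sketched with a rolling z-score: compare each new reading with the mean and standard deviation of the preceding window. The window size, the threshold of 3 standard deviations, and the readings are hypothetical choices; production predictive-maintenance systems typically use models trained on labeled failure data.

```python
from statistics import mean, stdev


def vibration_alerts(readings, window=5, threshold=3.0):
    """Indices of readings that deviate sharply from the recent baseline.

    readings:  vibration amplitudes in time order (consistent units assumed, e.g. mm/s).
    window:    number of preceding readings used as the baseline (at least 2).
    threshold: how many standard deviations away counts as anomalous.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    alerts = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all is treated as anomalous.
            if readings[i] != mu:
                alerts.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts


engine = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 2.05, 6.5, 2.0]  # hypothetical readings
print(vibration_alerts(engine))  # [7]
```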

In manufacturing, digital twins of factory machines have long been used for this purpose. In logistics, a supply chain digital twin can integrate data from factories, ports, and warehouses; AI then identifies anomalies (a delayed vessel, an earthquake) and simulates mitigation scenarios. Grand View notes that when machine learning is added, digital twins can “forecast demand variations, adjust inventory levels, and recommend the best transit routes” across an entire supply network.
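
One way such forecasts feed inventory decisions is through a reorder point. The textbook formula below, ROP = d·L + z·σ·√L (expected demand over the lead time plus safety stock), assumes independent, normally distributed daily demand and a fixed lead time; the numbers are hypothetical. If a supply chain twin detected a delayed vessel and lengthened the expected lead time, rerunning the calculation would raise the reorder point and trigger replenishment earlier.

```python
import math


def reorder_point(avg_daily_demand, demand_std, lead_time_days, z=1.65):
    """Expected lead-time demand plus safety stock.

    Assumes independent daily demand and a fixed lead time; z = 1.65 targets
    roughly a 95% cycle service level under a normal-demand assumption.
    """
    safety_stock = z * demand_std * math.sqrt(lead_time_days)
    return avg_daily_demand * lead_time_days + safety_stock


def needs_restock(on_hand, on_order, rop):
    """Reorder when inventory position (on hand + on order) is at or below the ROP."""
    return on_hand + on_order <= rop


normal = reorder_point(100, 20, lead_time_days=4)    # about 466 units
delayed = reorder_point(100, 20, lead_time_days=9)   # twin reports a 5-day vessel delay
print(round(normal), round(delayed), needs_restock(600, 0, delayed))
```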

Autonomous AI Fleets (Self-Driving Trucks & Bots)

Autonomous vehicles are a high-profile application of AI in logistics. Driverless trucks, vans, and robots can operate 24/7 and reduce labor costs. For example, Amazon developed Amazon Scout, a small six-wheeled autonomous delivery robot for last-mile package delivery. Scout was designed to navigate sidewalks at walking pace, safely transporting packages to customers without a driver. (Amazon field-tested Scout in Washington state, with delivery bots initially accompanied by an employee, aiming to eventually run fully autonomously.)

In long-haul transport, companies like TuSimple have demonstrated Level-4 autonomous trucks. In late 2021, TuSimple completed an 80-mile trial in Arizona without a human on board. This truck’s AI system handled highways, ramps, and traffic signals entirely on its own. These examples show how autonomous AI (self-driving) fleets can extend human capacity, running through the night and avoiding fatigue. In the future, such fleets might network with digital twins: each autonomous vehicle could have a virtual “twin” in a central system, allowing operations centers to simulate and optimize routes for the entire fleet in real time.

Conversational AI (Logistics Chatbots and Assistants)

Modern AI also changes the human interface. Chatbots and virtual assistants in logistics can answer customers’ package status queries 24/7 or help dispatchers plan routes. AI chatbots trained on logistics data can handle routine communications (e.g., “Where is my delivery?”) and flag exceptions (damaged goods, delays) for human follow-up. Logistics providers are already using these tools: for example, DHL reports that AI-enabled chatbots can understand customer intent better, automatically upsell or cross-sell services, and significantly cut call-center volume.
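
The routing logic behind "answer routine queries, escalate exceptions" can be sketched with keyword rules. This is a deliberate simplification: production chatbots usually rely on trained intent classifiers or language models, and the intents, patterns, and escalation set below are hypothetical.

```python
import re

# Hypothetical keyword rules, checked in insertion order (exceptions first).
INTENT_PATTERNS = {
    "damaged": re.compile(r"\b(damaged|broken|crushed)\b", re.IGNORECASE),
    "delay": re.compile(r"\b(late|delayed|missed)\b", re.IGNORECASE),
    "track": re.compile(r"\b(where|track|status)\b", re.IGNORECASE),
}
ESCALATE = {"damaged", "delay"}  # exceptions handed to a human agent


def route_message(text):
    """Return (intent, handler) for a customer message; unknown intents go to a human."""
    for intent, pattern in INTENT_PATTERNS.items():
        if pattern.search(text):
            return intent, ("human" if intent in ESCALATE else "bot")
    return "unknown", "human"


print(route_message("Where is my delivery?"))       # ('track', 'bot')
print(route_message("My parcel arrived damaged"))   # ('damaged', 'human')
```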

Beyond customer chat, AI agents can assist drivers and dispatchers. In a McKinsey study, one last-mile delivery company implemented AI-powered “virtual dispatchers” to help human dispatchers manage drivers. Remarkably, for a fleet of 10,000 vehicles, the company saved $30 million to $35 million on an AI investment of just $2 million, by automating tasks like rerouting drivers around traffic or providing automated roadside help. Such autonomous AI agents, whether a chatbot for customer support or an assistant for warehouse managers, are becoming integral AI use cases in logistics.

Digital Twins in Logistics & Beyond

Digital twin technology is not limited to one part of logistics. It spans numerous use cases across industries:

Supply Chain & Warehouse Twins

The most direct application is in supply chains and distribution centers. Logistics companies are using digital twins to map out entire networks of suppliers, trucks, ports, and DCs. By simulating disruptions (storms, strikes), they can stress-test resilience. For example, DHL notes that virtual supply chain simulations can help identify vulnerabilities without harming real operations.
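
A basic disruption stress test can be run as a Monte Carlo simulation: randomly disrupt supply lanes many times and measure how much demand is still met. The sketch below assumes lanes fail independently and a disrupted lane delivers nothing; the capacities and disruption probabilities are hypothetical, and a network twin would model partial outages, rerouting, and lead times.

```python
import random


def simulate_fill_rate(lanes, demand, trials=10_000, seed=42):
    """Monte Carlo estimate of the average fraction of demand met.

    lanes:  list of (capacity_units, disruption_probability) per supply lane.
    demand: units the DC needs per period.
    Each trial disrupts lanes independently; a disrupted lane delivers nothing.
    """
    rng = random.Random(seed)  # seeded so scenario runs are reproducible
    total = 0.0
    for _ in range(trials):
        delivered = sum(cap for cap, p_fail in lanes if rng.random() >= p_fail)
        total += min(delivered, demand) / demand
    return total / trials


dual = [(600, 0.05), (600, 0.05)]   # two hypothetical ports, 5% disruption risk each
single = [(1200, 0.05)]             # one port carrying all volume
storm = [(600, 0.50), (600, 0.05)]  # what-if: a storm threatens the first port
for name, lanes in [("dual", dual), ("single", single), ("storm", storm)]:
    print(name, round(simulate_fill_rate(lanes, 1000), 3))
```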

Warehouses use 3D digital twins to optimize shelf layouts and workflows. In one case, a European retailer created digital twins of over 2,000 stores (including aisles and inventory) to optimize replenishment and shelf stocking. Studies show such digital modeling can boost space utilization by ~15% and labor productivity by up to 40%. Emerging solutions (e.g., AWS IoT TwinMaker or Siemens’ digital twin platforms) allow companies to create these virtual warehouses by combining CAD layouts with sensor data, enabling simulation of traffic flows, picking routes, or even augmented-reality training.
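
A warehouse twin can compare layouts before anything moves on the floor. The sketch below scores a layout by expected picker travel per pick and applies velocity-based slotting (fastest movers closest to the packing station), a common heuristic rather than an optimal solver. Distances and pick shares are hypothetical, and a real twin would use measured aisle paths instead of single one-way distances.

```python
def expected_travel(slots, pick_frequency):
    """Average round-trip travel (m) per pick.

    slots:          dict SKU -> one-way distance (m) from the packing station.
    pick_frequency: dict SKU -> share of picks (assumed to sum to 1).
    """
    return sum(2 * slots[sku] * share for sku, share in pick_frequency.items())


def velocity_slotting(locations, pick_frequency):
    """Assign the fastest-moving SKUs to the closest locations."""
    ranked_skus = sorted(pick_frequency, key=pick_frequency.get, reverse=True)
    return dict(zip(ranked_skus, sorted(locations)))


locations = [5, 10, 20, 40]                          # hypothetical slot distances (m)
freq = {"A": 0.1, "B": 0.2, "C": 0.3, "D": 0.4}      # hypothetical pick shares
current = dict(zip(["A", "B", "C", "D"], locations))
proposed = velocity_slotting(locations, freq)
print(expected_travel(current, freq), expected_travel(proposed, freq))  # about 49 m vs 26 m
```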

Manufacturing and Construction Twins

In adjacent industries, digital twins are also transforming operations. In manufacturing, nearly every part of a factory can have a twin, from individual machines (“asset twins” for maintenance) to entire production lines. McKinsey notes that 86% of manufacturers see digital twins as applicable, with 44% already implementing them. In construction and building management, “digital twin in construction” is a growing trend.

Digital models of buildings and infrastructure allow architects and engineers to test designs virtually, then track actual sensor data (HVAC, occupancy) post-construction. For instance, builders may create a digital twin of a new office tower to optimize energy use and maintenance schedules. Likewise, urban planning is starting to leverage city-scale twins: smart cities like Singapore have 3D digital models to simulate traffic flows or infrastructure projects before ground-breaking. (This digital twin for urban planning helps city leaders make data-driven decisions on public transit routes, zoning, and utilities.)

Healthcare Digital Twins

The term even extends to life sciences. In healthcare, a “digital twin” can refer to a model of a hospital or even a human body. For example, hospitals are creating digital twins of their facilities and equipment to optimize patient flow and staffing. More ambitiously, some companies are working on digital twins of organs or patients, using patient data to simulate treatments or predict disease progression. (Siemens and Philips have initiatives around “digital patient twins” for personalized medicine.) According to one report, 66% of healthcare executives expect to increase investment in digital twins in the next three years.

Emerging & Hybrid Use Cases

Digital twins are evolving with AI. Some firms are exploring “AI digital twin” concepts, where generative models assist in running simulations or answering questions about the system. For instance, an LLM (large language model) could be trained on logistics data and linked to a supply chain twin, enabling it to answer queries like “If demand rises 20% next month, what should we adjust?” (Pairing LLMs with digital twins is still experimental, but it shows how AI and twins are converging.) Another novel application is in consumer products: Nestlé has announced plans for digital twins of pet food brands, using AI to simulate and test new formulas (the so-called “digital twin meals” concept).

In all these cases, the core idea is the same: bring data to life in a virtual model. As digital twin technology matures, its use cases range from routine (layout planning, maintenance optimization) to visionary (autonomous “self-driving” factories or supply chains). Companies offering digital twin services and solutions (from startups to industry giants) are addressing this broad spectrum of needs, often bundling IoT, simulation, and analytics into integrated platforms.

The benefits can include faster decision cycles, enhanced collaboration, and reduced risk (since changes can be tested in silico first). Indeed, by simulating scenarios like supply delays or demand surges, firms can make strategic adjustments proactively, turning data into resilience.

Real-World Case Studies

DHL

As a global logistics leader, DHL is both observing and applying AI and digital twin tech. DHL’s Innovation Radar notes that large players are “leveraging digital twins to enhance efficiency, resilience, and sustainability”. On the AI side, DHL uses AI chatbots to improve customer service and AI models for dynamic route optimization. A DHL Supply Chain leader highlights that partners like Oracle are chosen partly for their AI potential.

Indeed, DHL Supply Chain implemented Oracle Fusion Cloud ERP across 50+ countries, enabling unified data and AI-driven insights in finance and operations. For example, DHL now processes 3+ million invoices/year using Oracle’s AI-powered document recognition, freeing finance staff for strategic tasks. DHL is also piloting digital twins: for instance, its partner dm-drogerie markt uses store twins for inventory management, and DHL itself runs supply-chain simulations to stress-test networks. Overall, DHL’s case exemplifies an integrated approach: standardize on cloud platforms (Oracle) that natively support AI, while exploring digital twins for visibility and optimization.

Maersk

The shipping and logistics giant Maersk highlights digital twins as a top trend. Maersk describes how combining IoT with AI simulations creates “virtual replicas” for real-time optimization. Their trend map notes that digital twin adoption is still early (“innovative companies only”), but projects a major impact. Maersk estimates global twin markets growing 30 to 40% annually, reaching ~$150B by 2032.

In practice, Maersk has run pilots with cargo-vessel twins (for predictive maintenance) and terminal operation simulations (optimizing container flow). Their materials also emphasize twin applications in warehouse layout and supply chain planning. This case shows how a traditional transport firm is co-opting digital twin solutions to solve age-old logistics problems.

Tesla

Tesla is best known for its electric vehicles and Autopilot, but its story also touches logistics. Tesla’s vertical integration (in-house battery production, giant Gigafactories) is itself a logistics strategy. The company has pushed automation hard, though famously learning that “over-automation” can backfire. (During early Model 3 ramp-up, Tesla had to reintroduce humans after automated systems failed.)

Today, Tesla’s factories run AI-driven robotics but also monitor operations with advanced software. In logistics, Tesla is developing the electric Tesla Semi truck (with planned self-driving capability) to haul freight. Moreover, Tesla’s direct-to-consumer sales model relies on data integration: customers can track production and delivery online, a kind of digital twin of their own car’s journey. Thus, Tesla’s case is about pioneering new autonomous vehicle tech (part of autonomous AI fleets) and using data/AI throughout its supply chain and service network.

R-CON (Reality Capture Network)

R-CON is an industry consortium that holds events on digital twin innovation. For example, “R-CON: Digital Twins 2025” brings together experts from space, defense, construction, and logistics to share best practices. While not a single company case, R-CON panels highlight cross-sector lessons. For instance, space agencies use digital twins to simulate satellites and missions, then apply those learnings to supply chain resiliency on Earth. In logistics terms, R-CON discussions underscore that digital twin technology often starts in one field (e.g., aerospace) and quickly spills over to others (e.g., urban planning, warehouses). By attending such forums, logistics professionals discover novel use cases, from digital twins of ports and rail yards to AI-driven command centers.

Oracle

Oracle itself is a major case: its cloud ERP and supply chain products are now infused with AI and digital twin features. One illustration is how Oracle’s technology helped DHL (above). Beyond that, Oracle offers AI-based planning and IoT-driven twin capabilities in its SCM Cloud. Logistics customers can use Oracle’s tools for real-time demand forecasting and digital replica modeling. Oracle notes that with AI, users can embed augmented reality and digital simulations into logistics operations (e.g., AR overlays for warehouse pickers, or cloud-based twins for asset monitoring). In sum, Oracle’s role is both as a technology provider (enabling digital transformation) and as a collaborator with logistics companies to drive AI adoption.

These case studies show that leading organizations are not just experimenting; they are embedding AI and digital twin tech into core operations. Whether it’s autonomous vehicles on the road, AI agents in the control tower, or comprehensive digital twins of supply networks, these examples illustrate the real-world ROI: lower costs, faster deliveries, and better risk management.

Benefits, Use Cases, Challenges & Opportunities

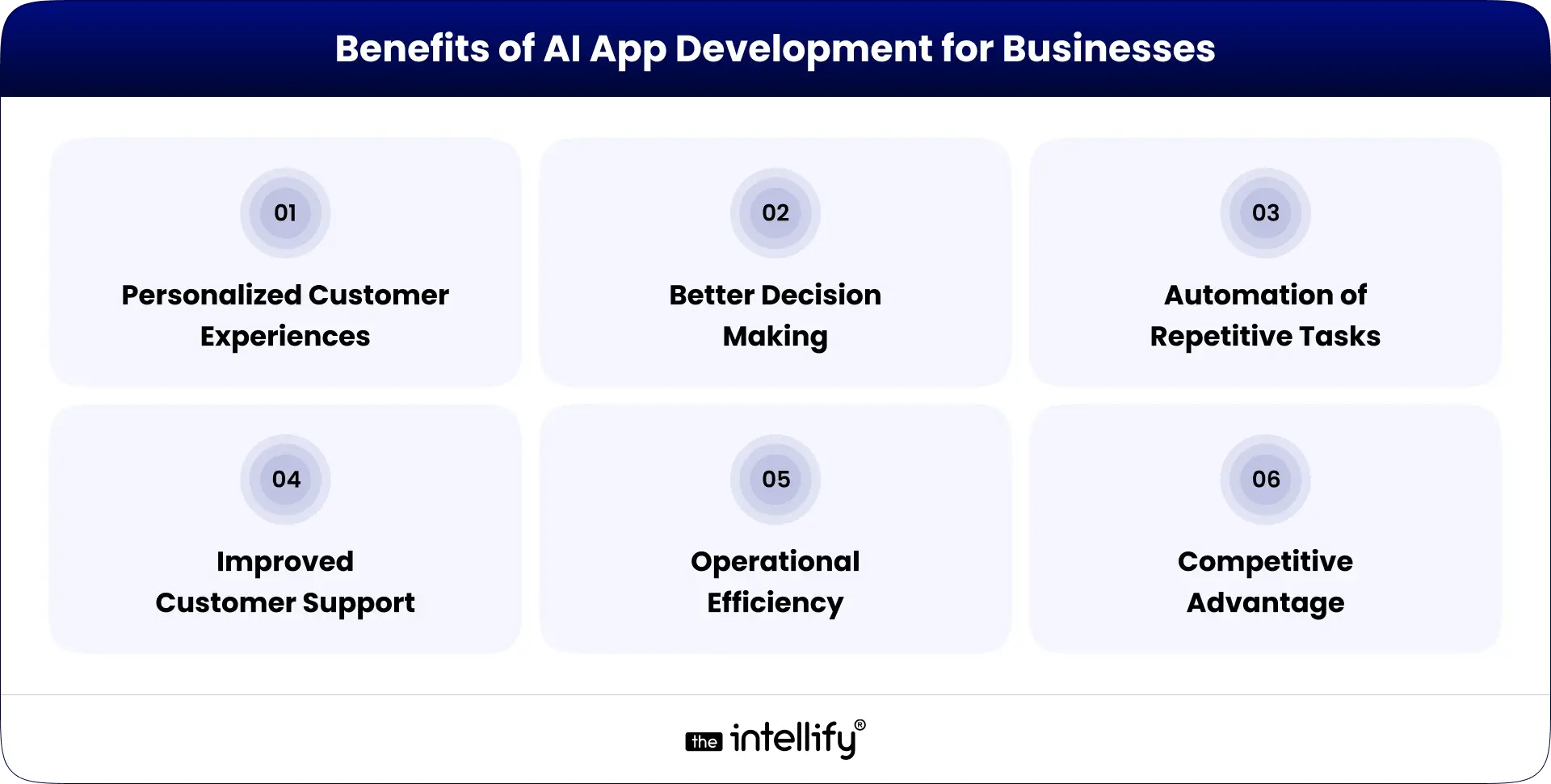

Benefits:

AI and digital twins jointly offer powerful advantages for logistics:

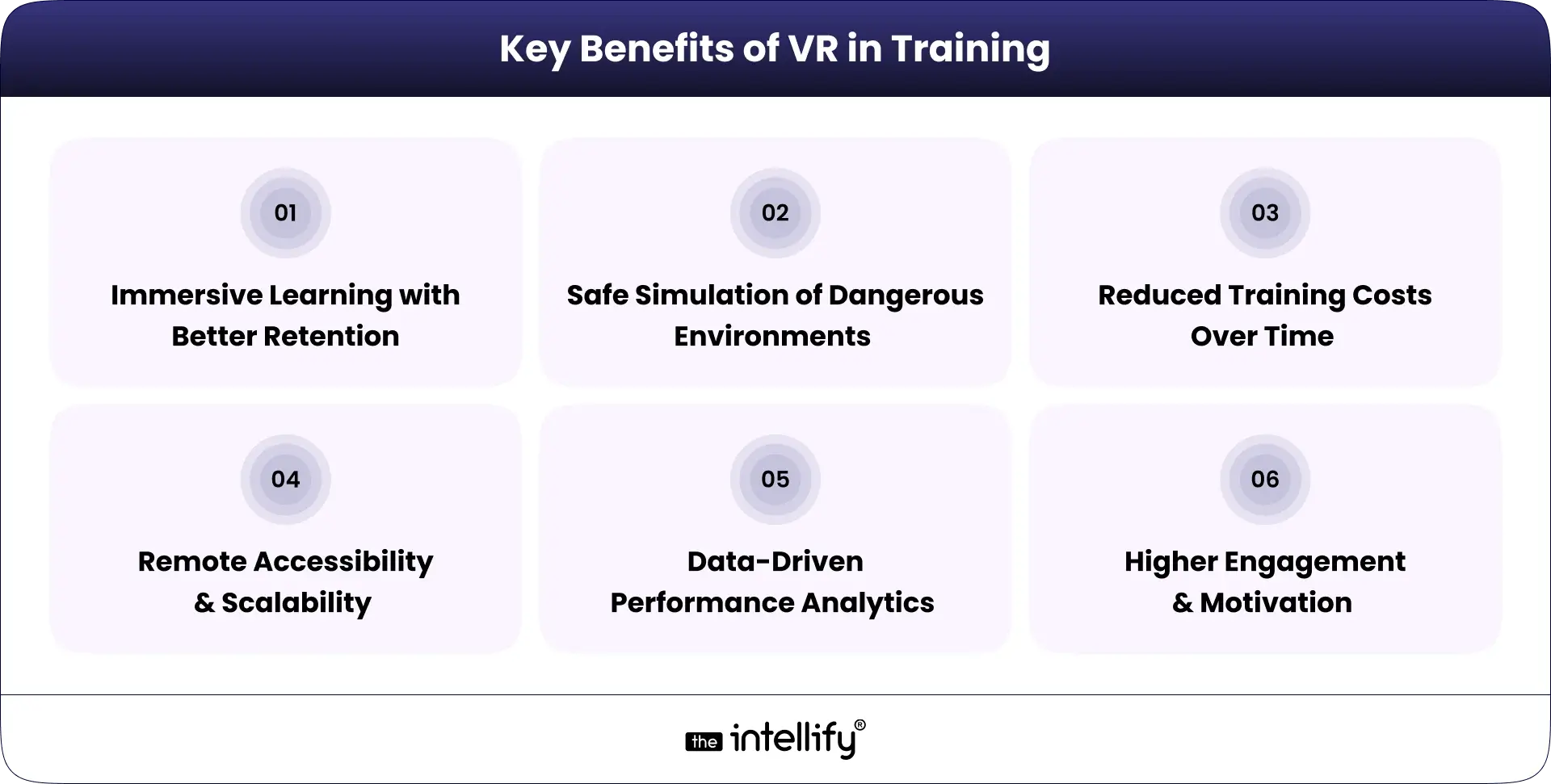

Improved Efficiency & Cost Savings

AI algorithms automate routing, inventory allocation, and repetitive tasks, shrinking labor and transportation costs. Studies find AI adopters can cut logistics costs by ~15% and sharply reduce forecasting errors (by up to 50%, with up to 65% fewer lost sales). Digital twins further boost efficiency by enabling simulations. For example, a factory twin allowed an industrial client to redesign production schedules and cut monthly overtime by 5 to 7%. Overall, McKinsey estimates digital logistics tools often yield 10 to 20% performance gains immediately and 20 to 40% gains over a few years. Such tools can also de-risk costs: building resilience via simulation can protect up to 60% of EBITDA in disruptions.

Greater Visibility & Resilience

Real-time AI dashboards and twins give full visibility across the chain. Logistics managers can stress-test entire networks virtually, for example, running a hurricane scenario through a digital twin of supply chains to spot bottlenecks. This leads to smarter contingency planning. Toyota, for instance, has cited digital twin simulation as key to quickly rerouting shipments during sudden port closures. In short, better data and models let companies react faster and avoid blind spots.

Enhanced Customer Experience

AI chatbots and better tracking improve service. Customers get faster answers via conversational AI, while behind the scenes, AI ensures more reliable deliveries. DHL notes that AI-driven chatbots can even upsell or cross-sell services (e.g., offering expedited shipping) based on detected customer intent. Additionally, personalized supply chain offerings (like guaranteed delivery windows) become feasible when AI optimizes each order.

Innovation Enablement

Integrating AI and digital twins opens new business models. Logistics firms can, for example, offer data-driven consulting: using digital twin analysis to advise clients on network design. Some shipping companies sell access to their predictive analytics as a service. Moreover, the synergy of AI + twin tech enables futuristic concepts like autonomous supply chains where AI agents coordinate fleets based on digital twin forecasts.

Use Cases:

Key use cases include:

- Demand Forecasting: AI models integrated with twin simulations to predict inventory needs.

- Route & Fleet Optimization: Dynamic rerouting with AI, and fully driverless truck convoys.

- Warehouse Automation: Guided vehicles and layout tweaks via twin modeling.

- Predictive Maintenance: Twin-based monitoring of equipment (cranes, trucks) to schedule repairs before failures.

- Customer Service: AI chatbots for shipment tracking, and AI agents for complex queries.

- Risk Simulation: Digital twin “war rooms” that run geopolitical or disaster scenarios on supply chains.

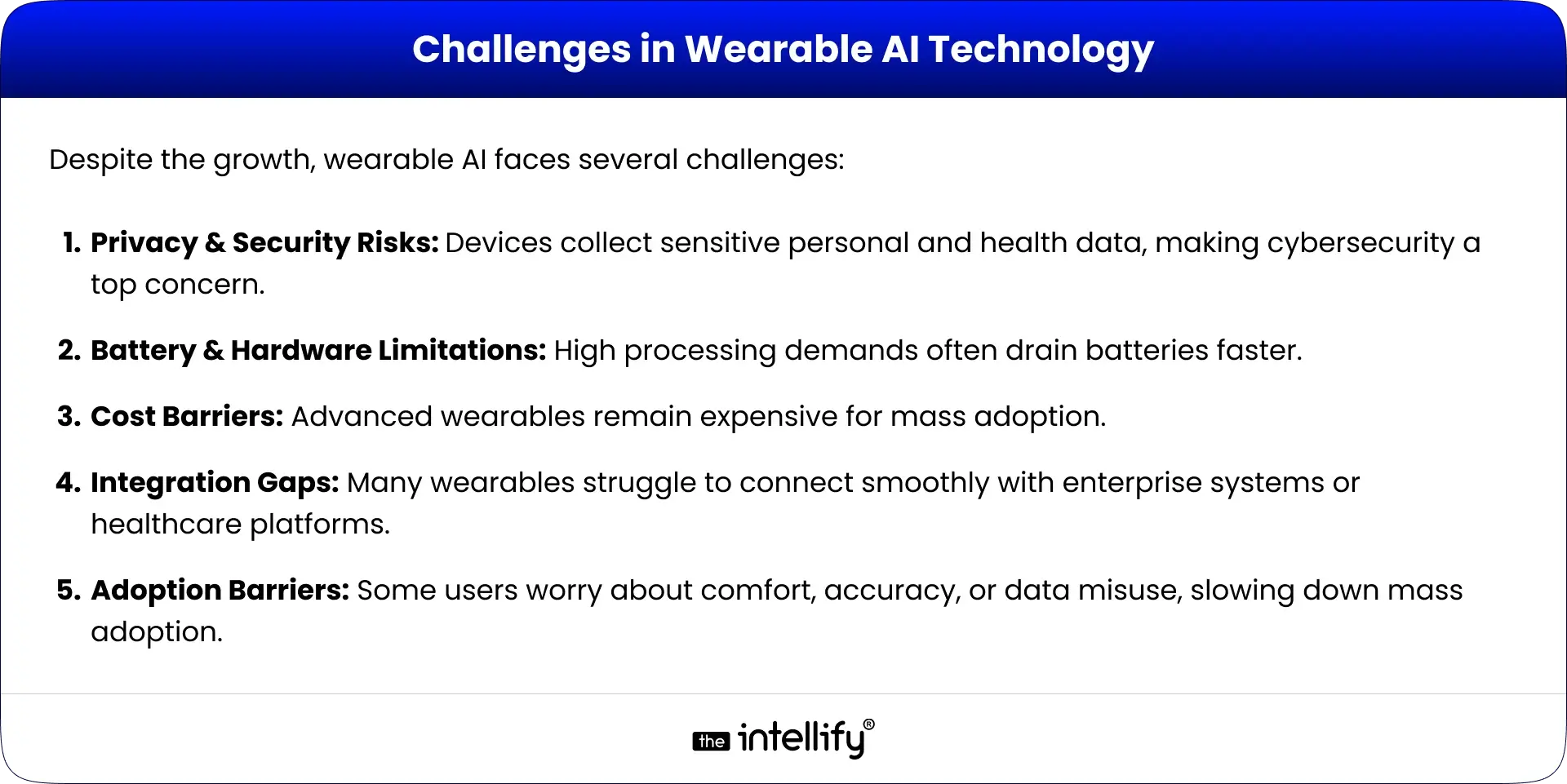

Challenges:

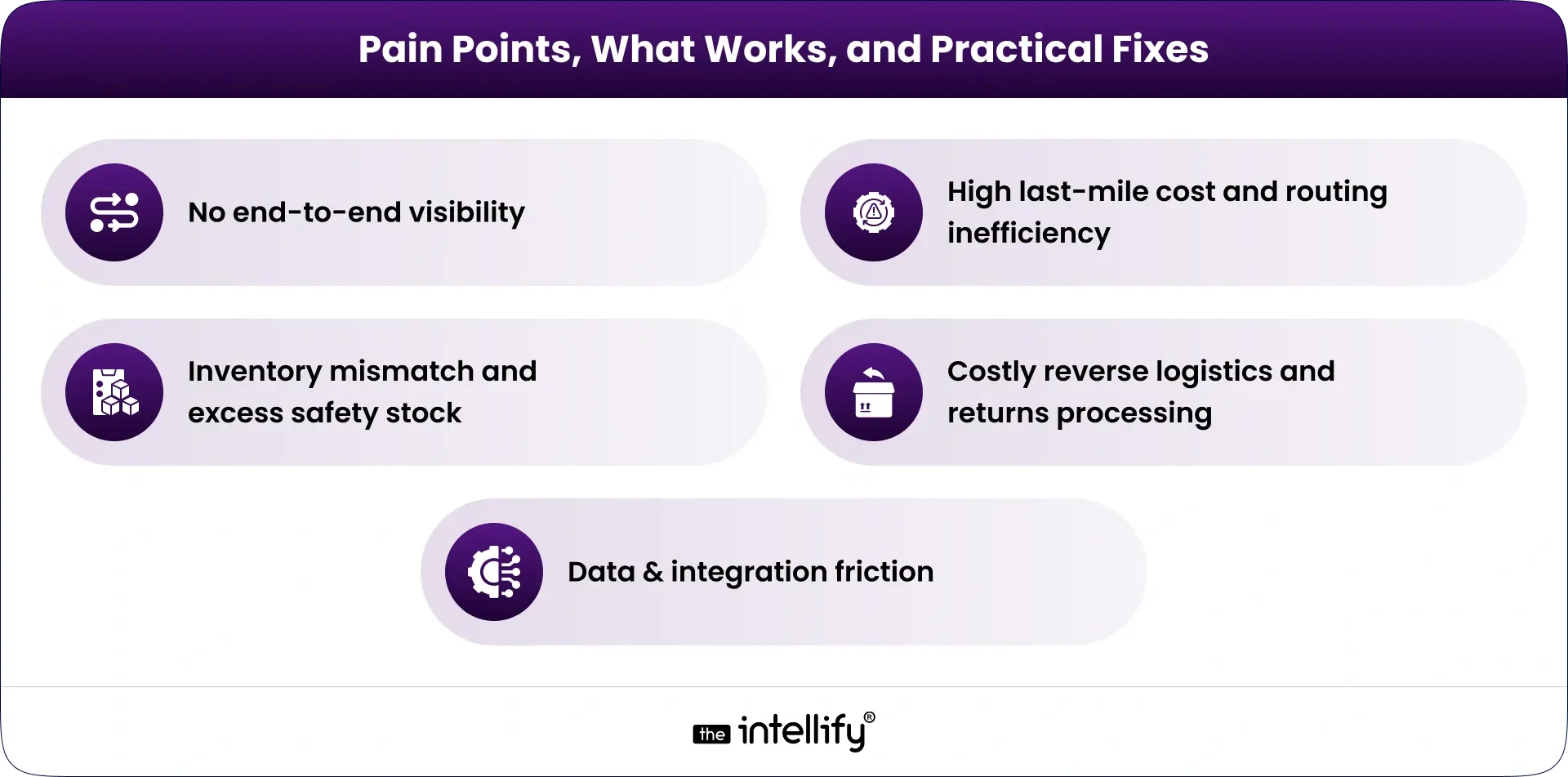

Adoption is not without hurdles. Common obstacles include:

- High Upfront Costs: Implementing AI systems and building digital twins (sensors, computing, integration) requires investment. Many firms worry about ROI.

- Data Quality & Integration: AI and twins need clean, real-time data. Fragmented legacy systems in logistics (multiple ERPs/WMSs) complicate this. In fact, many shippers now juggle 5+ different tech solutions in transport and warehousing. Integrating these data streams is nontrivial.

- Skills & Change Management: New talent (data scientists, IoT specialists) and change processes are needed. Front-line staff and managers must trust and interpret AI outputs.

- Privacy & Security: Logistics data often contains sensitive customer or supplier info. Ensuring AI/twin platforms are secure is critical.

- Technology Maturity: Some AI applications are still emerging. Over-reliance without expert oversight can backfire (as Tesla’s “production hell” hinted).

Opportunities:

Despite challenges, the upside is huge. As AI models (including generative AI) improve, new opportunities emerge:

- Autonomous Agents: AI “agents” that act autonomously (booking shipments, negotiating rates) are on the horizon. McKinsey notes virtual dispatcher agents already saving fleets millions.

- Cross-Industry Solutions: Firms can apply digital twin learnings from other sectors (e.g., Airbus uses twins for aircraft maintenance; similar models could optimize truck fleets). R-CON conferences show how dual-use ideas transfer between defense, telecom, and logistics.

- Sustainability Gains: AI+twin optimization can significantly cut fuel use and emissions. For instance, improved routing is estimated to reduce empty (unladen) miles by about 15%. Customers increasingly reward “green” shippers, so logistics providers can gain market share through such optimization.

- Platform Ecosystems: Major cloud providers (AWS, Azure, Google) and software firms (SAP, Oracle) are bundling AI and twin services. Logistics firms can leverage these existing platforms rather than building from scratch. For example, Oracle’s AI-in-ERP easily feeds into its SCM Cloud to create digital twins of planning processes.

In summary, the benefits of AI and digital twins in logistics are clear: lower costs, higher throughput, better resilience, and new revenue streams. The challenges (cost, data, change) are surmountable with the right strategy. And the opportunities, from fully autonomous fleets to AI-driven global logistics platforms, make this the defining technology wave for 2025 and beyond.

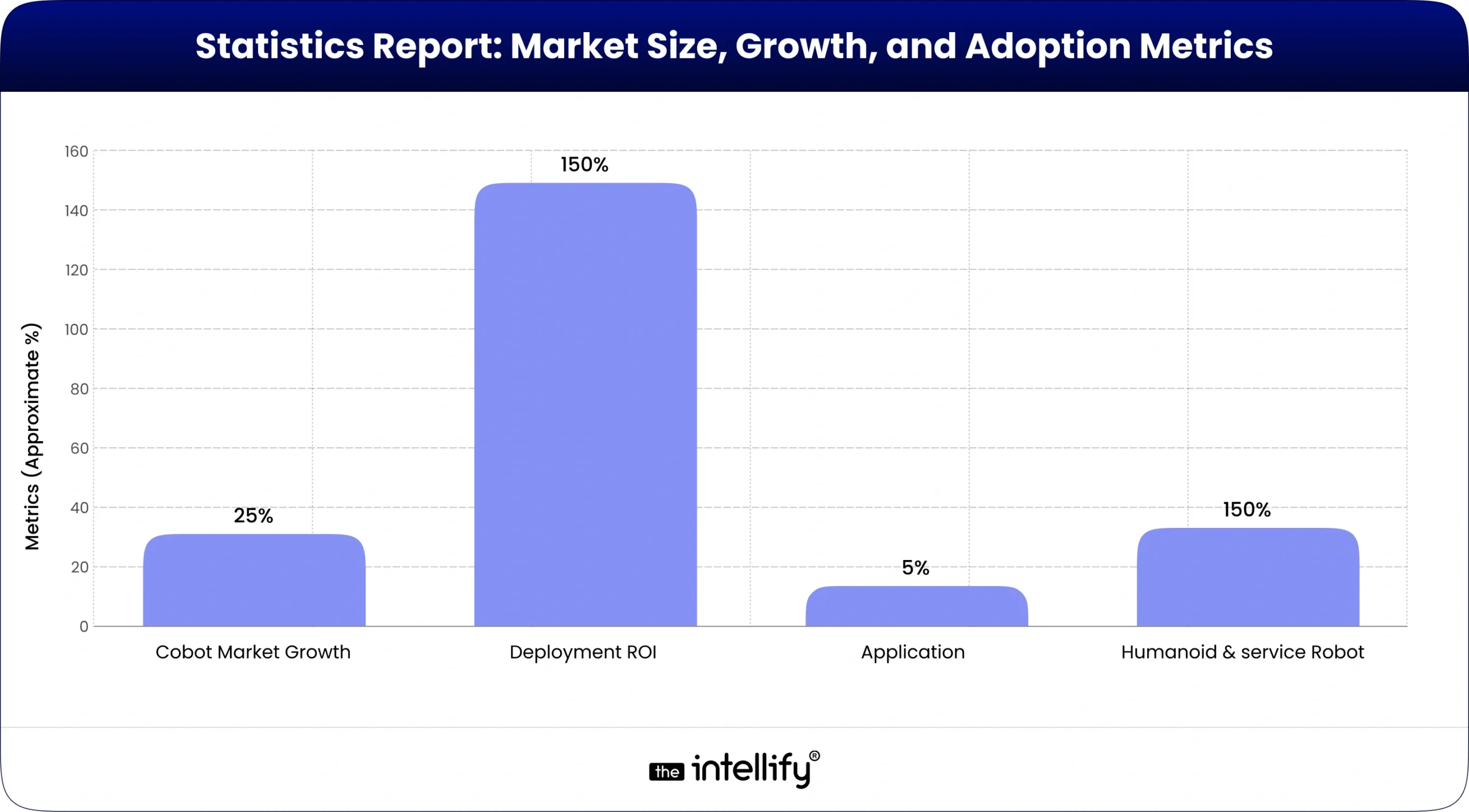

Statistics Report

Key current figures and forecasts for AI and digital twins in logistics:

- AI in Logistics Market: USD 20.1 billion (2024) → ~$196.6 billion (2034) at 25.9% CAGR.

- Digital Twin Market: USD 24.97 billion (2024) → $155.84 billion (2030) at 34.2% CAGR.

- Supply Chain Digital Twin: USD 2.49 billion (2022), with ~12% CAGR (2023 to 2030).

- AI Adoption Impact: Early AI users in logistics achieve ~15% lower logistics costs and 35% better inventory levels vs. competitors.

- CEO Commitment: 97% of manufacturing CEOs plan to use AI in operations within two years.

- Digital Twin Adoption: In manufacturing, 86% of execs see digital twin applicability; 44% have implemented a digital twin.

- Healthcare Investment: 66% of healthcare leaders plan greater digital twin investment in 3 years.

- Last-Mile Costs: Last-mile delivery costs rose from 41% of total shipping costs (2018) to 53% (2023), highlighting room for AI-driven optimization.

- Warehouse Efficiency: Digital twin and 3D visualization can improve space utilization in warehouses by ~15% and boost labor productivity by up to 40%.

These statistics underscore the rapid growth and tangible impact of AI and digital twins in logistics and supply chain operations.

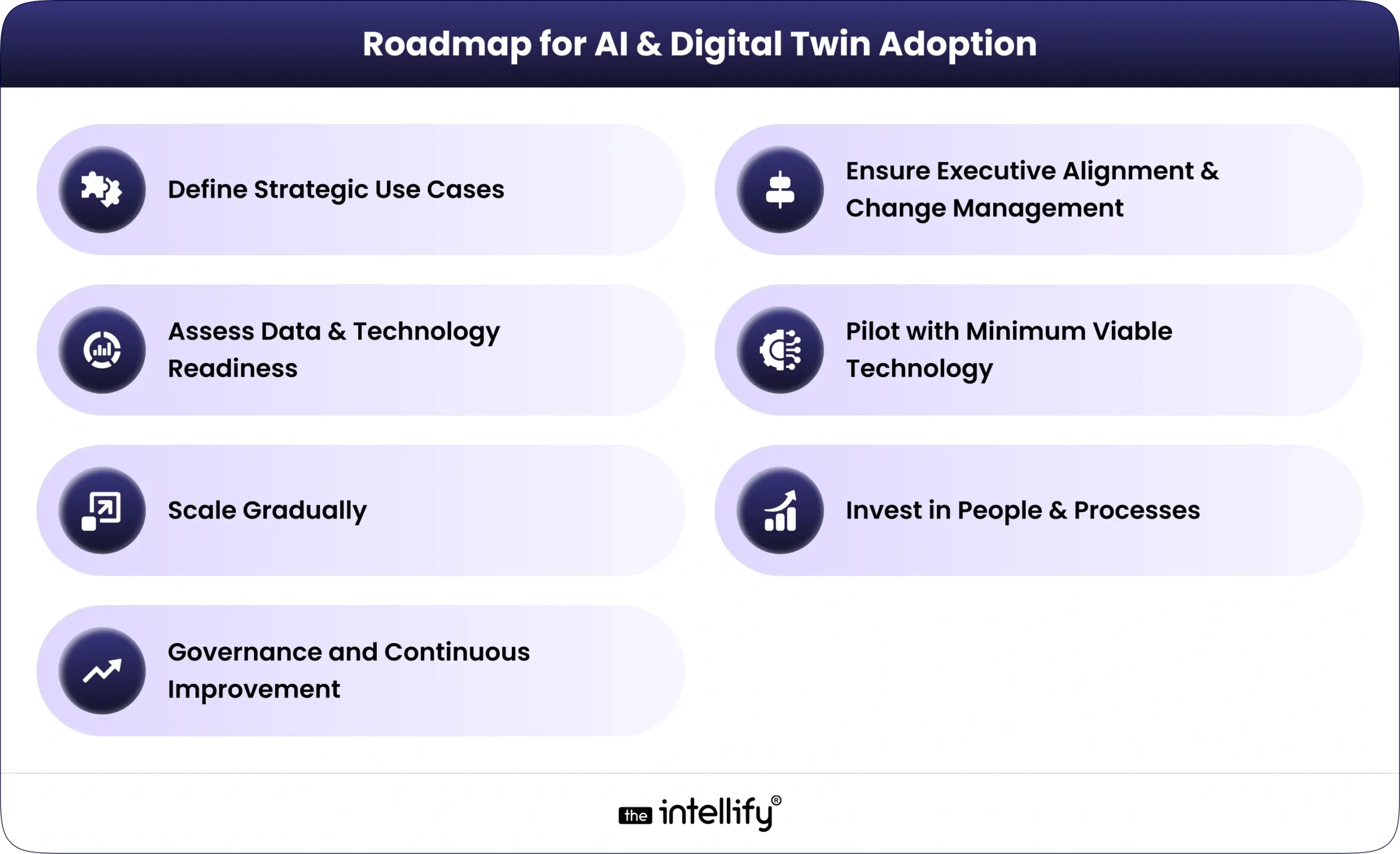

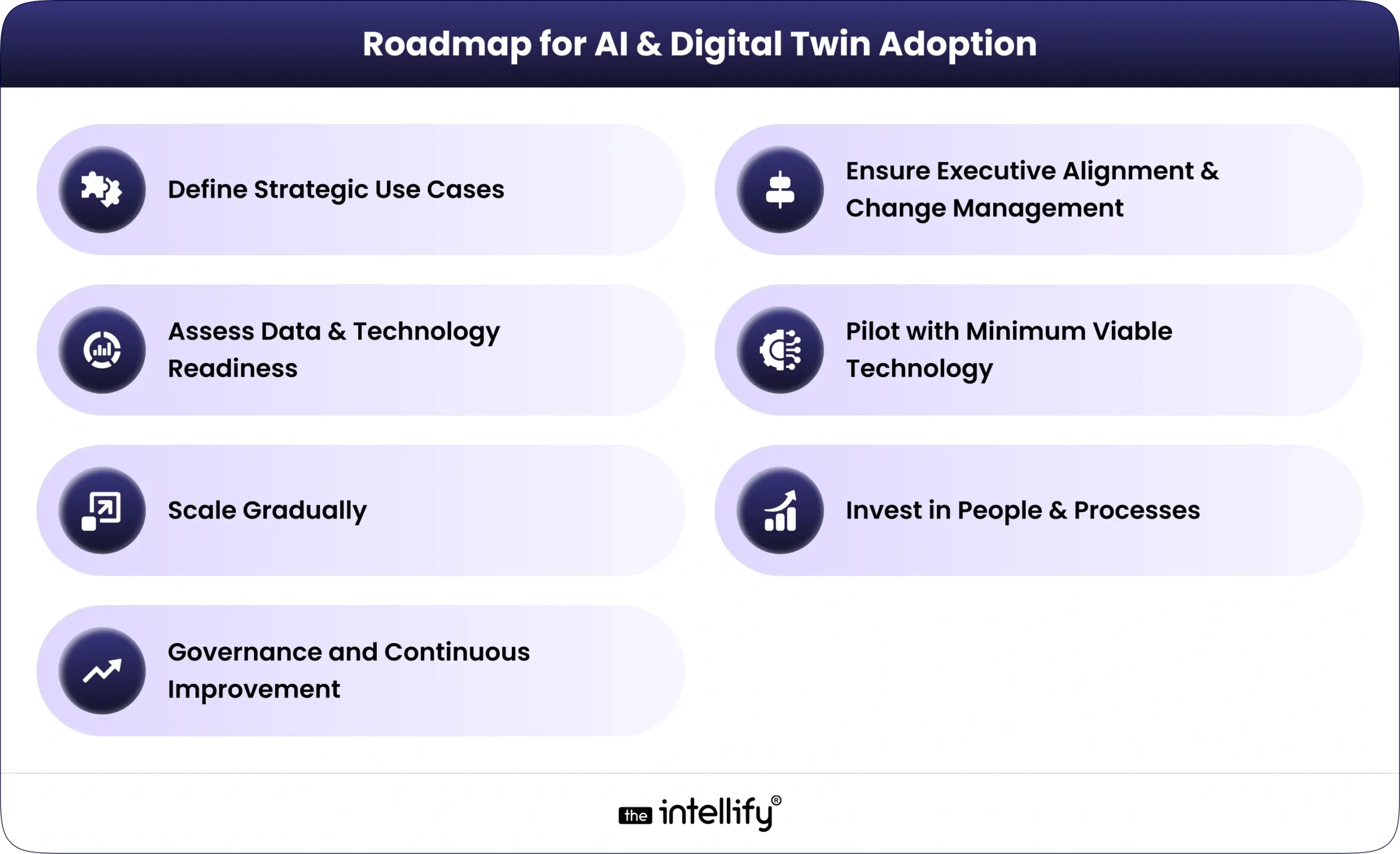

Roadmap for AI & Digital Twin Adoption

For U.S. CEOs, CTOs, and logistics executives looking to implement these technologies, a staged approach works best:

- Define Strategic Use Cases: Begin by identifying a high-value logistics problem and a “quick win” pilot. For example, target a region with chronic delays or a warehouse with low throughput. Decide on clear KPIs (on-time rate, cost per shipment) up front. This ensures the AI/twin project is tightly aligned to business outcomes.

- Ensure Executive Alignment & Change Management: Secure C-suite support and communicate goals across teams. McKinsey warns that tech ROI requires “reimagining the way you work in conjunction with technology,” so involve operations, IT, and business units in redesigning processes. Set up a governance team (including data scientists, engineers, and ops managers) to oversee the deployment.

- Assess Data & Technology Readiness: Audit existing data sources: ERP/WMS systems, fleet telematics, IoT sensors in facilities, etc. Good AI and digital twins need clean, continuous data. You may need to upgrade GPS trackers on trucks, add RFID or cameras in warehouses, or consolidate multiple ERPs (as DHL did with Oracle Cloud). Invest in a solid data platform or cloud service to integrate these feeds. (If data is siloed or of poor quality, start with data cleansing and pipeline work.)

- Pilot with Minimum Viable Technology: Run a small-scale pilot. For instance, test an AI-based route optimizer on one delivery region, or create a digital twin of one warehouse zone and simulate throughput scenarios. Using modular solutions or “starter packs” (cloud-based AI tools, twin frameworks) can accelerate this. Measure results carefully and iterate. Early successes will build momentum.

- Scale Gradually: Once proven, expand to more routes, warehouses, or supply nodes. Integrate the pilot AI tool into broader systems (e.g., incorporate route AI into the TMS). Expand the digital twin model to other assets (e.g., from one DC twin to multiple DCs, linking them with transportation data). Leverage platforms (like AWS, Azure, Oracle) that offer built-in AI/twin capabilities to streamline roll-out.

- Invest in People & Processes: Train staff to work with AI outputs. For example, dispatchers may need training on using an AI-driven logistics dashboard; warehouse management might need to interpret twin simulation reports. Consider hiring or upskilling data analysts who can fine-tune AI models. Maintain a feedback loop: use human expertise to correct AI errors and continually improve the models.

- Governance and Continuous Improvement: As systems go live, establish KPIs and dashboards for ongoing monitoring. Review performance regularly and watch for innovations (e.g., generative AI or 5G-enabled twins). Remain agile: McKinsey notes that as tech evolves, leaders should continuously reassess which tools to invest in.
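
The KPIs suggested in the first step (on-time rate and cost per shipment) are simple ratios, so a pilot's effect can be checked by comparing a baseline period with the pilot period. A minimal sketch with hypothetical numbers follows; the `PeriodResults` structure is an illustrative assumption, and a real evaluation should also control for seasonality and changes in volume mix.

```python
from dataclasses import dataclass


@dataclass
class PeriodResults:
    shipments: int
    on_time: int
    total_cost: float  # USD, all-in transport cost for the period


def kpis(r):
    """On-time rate and cost per shipment for one period."""
    if r.shipments == 0:
        raise ValueError("no shipments in period")
    return {"on_time_rate": r.on_time / r.shipments,
            "cost_per_shipment": r.total_cost / r.shipments}


def pilot_delta(baseline, pilot):
    """Pilot minus baseline for each KPI (higher on-time rate and lower cost are better)."""
    b, p = kpis(baseline), kpis(pilot)
    return {k: p[k] - b[k] for k in b}


baseline = PeriodResults(shipments=2000, on_time=1700, total_cost=250_000.0)  # hypothetical
pilot = PeriodResults(shipments=2000, on_time=1820, total_cost=236_000.0)     # hypothetical
print(kpis(baseline), kpis(pilot), pilot_delta(baseline, pilot))
```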

By following this roadmap (starting with well-scoped pilots, building data foundations, and scaling up with executive support), logistics firms can master AI in logistics and digital twin implementation. Early adopters like DHL and Amazon demonstrate that even in a conservative industry, substantial gains await those who invest in these technologies.

Conclusion

AI-driven tools and digital twin models are rapidly transforming logistics and supply chain management. They offer a powerful competitive edge: higher efficiency, lower costs, greater visibility, and more resilient operations. With e-commerce growth and global disruptions showing no signs of slowing, U.S. logistics leaders cannot afford to sit on the sidelines. As the data shows, the AI in logistics market is projected to grow at roughly 26% a year, and digital twins are moving from pilot experiments to enterprise strategy.

CEOs, CTOs, and COOs should view AI and digital twins not as future possibilities but as immediate imperatives. By following a clear adoption roadmap, aligning strategy, building data infrastructure, running pilot projects, and scaling proven solutions, companies can harness AI’s predictive power and the virtual testing capabilities of digital twins. The payoff will be substantial: smarter routing, autonomous fleets, 24/7 intelligent support, and supply chains that are adaptive, transparent, and optimized. In sum, integrating AI in logistics and digital twin technology is no longer a luxury but a necessity for any enterprise that aims to lead the next wave of logistics innovation.

Most Important FAQs

Q1. What is AI in logistics and why is it important?

A: AI in logistics uses data and automation to improve routes, cut costs, and boost delivery speed.

Q2. How do autonomous fleets improve logistics operations?

A: Autonomous fleets reduce human error, optimize fuel usage, and run deliveries 24/7.

Q3. What is digital twin technology in logistics?

A: A digital twin is a virtual replica of logistics assets, used to simulate, predict, and optimize operations.

Q4. How can AI cut logistics costs for companies?

A: AI reduces fuel, labor, and idle time costs through predictive analytics and smart routing.

Q5. What industries benefit most from AI in logistics?

A: E-commerce, retail, manufacturing, healthcare, and automotive gain faster delivery and efficiency.

Q6. What are the real-world examples of AI in logistics?

A: Companies like UPS and DHL use AI for route optimization, digital twins, and predictive maintenance.

Q7. How secure are autonomous fleets in logistics?

A: Autonomous fleets use AI-driven safety systems, sensors, and compliance with transport regulations.

Q8. Can digital twins predict supply chain disruptions?

A: Yes, digital twins simulate scenarios like demand spikes or delays to prevent costly disruptions.

Q9. What are the challenges of using AI in logistics?

A: Challenges include high setup costs, data integration issues, and workforce adoption.

Q10. What is the future of AI in logistics?

A: The future includes fully autonomous fleets, real-time digital twins, and AI agents managing end-to-end supply chains.